Overview

Training deep Reinforcement Learning (RL) agents is computationally expensive, often requiring millions of environment interactions. When visual appearance, task dynamics, or action spaces change—even slightly—most methods require costly retraining from scratch or extensive fine-tuning.

SAPS (Semantic Alignment for Policy Stitching) tackles this problem by enabling zero-shot modular policy reuse. Given independently trained RL agents, SAPS learns a simple affine transformation to map between their latent representations, allowing encoders and controllers from different agents to be “stitched” together without any additional training.

The key insight: independently trained RL encoders learn representations that differ primarily by geometric transformations (rotations, translations), not semantic structure. By estimating these transformations from a small set of semantically aligned “anchor” observations, we can seamlessly compose policies across visual and task variations.

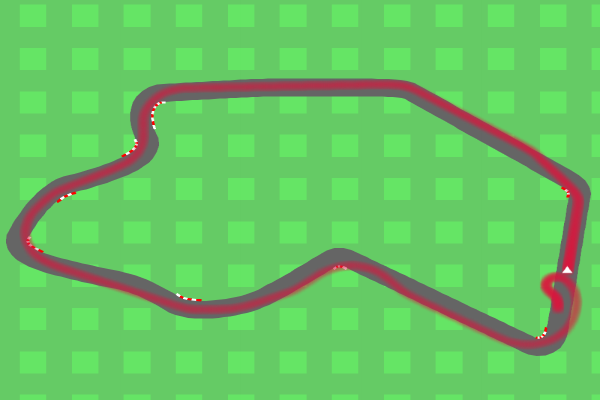

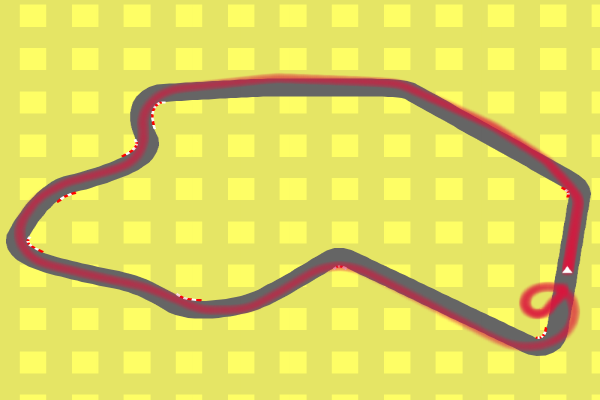

Source encoder trained here

Zero-shot stitched policy works here

Method: Semantic Alignment for Policy Stitching

Core Idea

Independently trained RL encoders learn representations that differ primarily by geometric transformations (rotations, translations), not semantic structure. SAPS estimates these transformations using a small set of semantically aligned "anchor" observations, enabling zero-shot policy composition.

The Stitching Problem

Consider two independently trained RL agents:

- Agent u: Trained on environment variation u (e.g., green background, standard speed)

- Agent v: Trained on environment variation v (e.g., red background, slow speed)

Each agent consists of:

- Encoder \(\phi\): Maps observations to latent representations

- Controller \(\psi\): Maps latent representations to actions

Goal: Create a new agent that combines encoder \(\phi_u\) with controller \(\psi_v\) to operate in a new environment variation (u’s visual appearance with v’s task dynamics) without any training.

Affine Transformation via SVD

SAPS estimates an affine transformation \(\tau_u^v: \mathcal{X}_u \rightarrow \mathcal{X}_v\) that maps latent representations from encoder u to be compatible with controller v:

\[\tau_u^v(\mathbf{x}_u) = \mathbf{R} \mathbf{x}_u + \mathbf{b}\]

where:

- \(\mathbf{R}\) is an orthogonal rotation matrix obtained via Singular Value Decomposition (SVD)

- \(\mathbf{b}\) is a translation vector
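A common way to obtain such an \(\mathbf{R}\) and \(\mathbf{b}\) from paired embeddings of the same underlying states is the orthogonal Procrustes solution. The sketch below assumes that this is the estimator intended. The pair index \(n\), the count \(N\), the means \(\boldsymbol{\mu}_u, \boldsymbol{\mu}_v\), and the factors \(\mathbf{U}, \boldsymbol{\Sigma}, \mathbf{V}\) are notation introduced here for illustration.

\[\boldsymbol{\mu}_u = \frac{1}{N}\sum_{n=1}^{N} \mathbf{x}_u^{(n)}, \qquad \boldsymbol{\mu}_v = \frac{1}{N}\sum_{n=1}^{N} \mathbf{x}_v^{(n)}\]

\[\mathbf{M} = \sum_{n=1}^{N} \left(\mathbf{x}_v^{(n)} - \boldsymbol{\mu}_v\right)\left(\mathbf{x}_u^{(n)} - \boldsymbol{\mu}_u\right)^{\top} = \mathbf{U} \boldsymbol{\Sigma} \mathbf{V}^{\top}\]

\[\mathbf{R} = \mathbf{U}\mathbf{V}^{\top}, \qquad \mathbf{b} = \boldsymbol{\mu}_v - \mathbf{R}\,\boldsymbol{\mu}_u\]

Under these assumptions, \(\mathbf{R}\) is the orthogonal matrix that minimizes \(\sum_n \lVert \mathbf{R}\mathbf{x}_u^{(n)} + \mathbf{b} - \mathbf{x}_v^{(n)} \rVert^2\), and \(\mathbf{b}\) moves the rotated source mean onto the target mean.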

The stitched policy is then:

\[\tilde{\pi}_u^v(\mathbf{o}_u) = \psi_v^j[\tau_u^v(\phi_u^i(\mathbf{o}_u))]\]

Anchor Collection

The transformation is estimated from pairs of semantically aligned observations called “anchors”. Two approaches are used:

- Pixel-space transformation: When visual variation is well-defined (e.g., color changes), directly transform observations in pixel space

- Action replay: Replay the same action sequence in both environments (requires deterministic environments with same seed)
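As an illustration of the pixel-space approach, the following minimal sketch recolors the background of a frame to produce the paired anchor. The function name `recolor_background`, the RGB values, and the tolerance are hypothetical choices made for this example. They are not taken from the SAPS implementation or from the actual CarRacing palette.

```python
import numpy as np

def recolor_background(obs, src_rgb, dst_rgb, tol=30):
    """Return a copy of `obs` with pixels close to `src_rgb` set to `dst_rgb`.

    obs: uint8 array of shape (H, W, 3).
    tol: per-channel tolerance (hypothetical value) for matching the background.
    """
    obs = np.asarray(obs, dtype=np.uint8)
    # Signed arithmetic avoids uint8 wrap-around when subtracting colors.
    diff = np.abs(obs.astype(np.int16) - np.asarray(src_rgb, dtype=np.int16))
    mask = diff.max(axis=-1) <= tol
    out = obs.copy()
    out[mask] = np.asarray(dst_rgb, dtype=np.uint8)
    return out

# Hypothetical colors; real environments use their own palettes.
GREEN = (102, 204, 102)
RED = (204, 102, 102)

frame = np.zeros((4, 4, 3), dtype=np.uint8)
frame[:] = GREEN
frame[1, 2] = (128, 128, 128)  # a non-background pixel, e.g. part of the road

anchor_u = frame                                   # observation in variation u
anchor_v = recolor_background(frame, GREEN, RED)   # paired observation in variation v
```

Each `(anchor_u, anchor_v)` pair shows the same scene under both visual variations, so the two encoders can be run on them to obtain paired embeddings.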

From anchor pairs \((\mathbf{a}_u, \mathbf{a}_v)\), we obtain embeddings \((\mathbf{x}_u, \mathbf{x}_v)\) and estimate \(\tau\) via SVD to find the optimal orthogonal alignment.
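The estimation step can be sketched with NumPy as follows. This is a minimal sketch that assumes the standard orthogonal Procrustes solution with mean-centering. The function names `estimate_affine` and `stitch`, the synthetic data, and the toy encoder and controller are illustrative and are not the authors' code.

```python
import numpy as np

def estimate_affine(x_u, x_v):
    """Fit orthogonal R and translation b so that R @ x_u[n] + b ~= x_v[n].

    x_u, x_v: arrays of shape (N, d) holding paired anchor embeddings.
    """
    x_u = np.asarray(x_u, dtype=np.float64)
    x_v = np.asarray(x_v, dtype=np.float64)
    mu_u, mu_v = x_u.mean(axis=0), x_v.mean(axis=0)
    # Cross-covariance of centered target and source embeddings, shape (d, d).
    m = (x_v - mu_v).T @ (x_u - mu_u)
    u, _, vt = np.linalg.svd(m)
    r = u @ vt               # closest orthogonal map in the least-squares sense
    b = mu_v - r @ mu_u      # moves the rotated source mean onto the target mean
    return r, b

def stitch(encoder_u, controller_v, r, b):
    """Compose encoder u, the affine map, and controller v into one policy."""
    def policy(obs):
        return controller_v(r @ encoder_u(obs) + b)
    return policy

# Synthetic check: latent space v is a rotated and shifted copy of latent space u.
rng = np.random.default_rng(0)
d = 8
q, _ = np.linalg.qr(rng.normal(size=(d, d)))   # random orthogonal matrix
shift = rng.normal(size=d)
anchors_u = rng.normal(size=(64, d))
anchors_v = anchors_u @ q.T + shift

r, b = estimate_affine(anchors_u, anchors_v)

# Toy stand-ins: identity encoder and identity controller.
policy = stitch(lambda obs: obs, lambda z: z, r, b)
```

On this noise-free synthetic data the fit recovers `q` and `shift` up to floating-point error. With real encoders the fit is only approximate, and its quality depends on how well the anchors cover the states the policy visits.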

Experiments: CarRacing

We evaluate SAPS on CarRacing with multiple visual variations (green, red, blue backgrounds, far camera) and task variations (standard, slow speed, scrambled actions, no idle).

Zero-Shot Stitching Performance

| Encoder | Controller | Naive | R3L | SAPS (ours) | Improvement vs. R3L |
|---|---|---|---|---|---|
| Green | Green | 175±304 | 781±108 | 822±62 | +5% |
| Green | Slow | 148±328 | 268±14 | 764±287 | +185% |
| Red | Red | 43±205 | 776±92 | 807±52 | +4% |
| Blue | Blue | 11±122 | 792±48 | 814±52 | +3% |
| Far | Green | 152±204 | 527±142 | 714±45 | +35% |

Key observations:

- Matches or exceeds R3L: SAPS achieves R3L-level performance without specialized training

- Excels on challenging cases: Slow task (764 vs 268 for R3L, +185%) and far camera (714 vs 527 for R3L, +35%)

- Large improvement over naive: Mean scores are roughly 4.7x to 74x higher than naive stitching without alignment

- Near end-to-end quality: Performance approaches end-to-end trained models (800-850 range)
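The percentages and ratios quoted above can be re-derived from the mean scores in the table. The short script below does this arithmetic. It uses only the table means and ignores the reported standard deviations, and the helper names are illustrative.

```python
# Mean scores copied from the table: (naive, R3L, SAPS) per encoder/controller pair.
rows = {
    ("Green", "Green"): (175, 781, 822),
    ("Green", "Slow"): (148, 268, 764),
    ("Red", "Red"): (43, 776, 807),
    ("Blue", "Blue"): (11, 792, 814),
    ("Far", "Green"): (152, 527, 714),
}

def gain_over_r3l(saps, r3l):
    """Relative improvement of SAPS over R3L, in percent."""
    return 100.0 * (saps - r3l) / r3l

def ratio_over_naive(saps, naive):
    """How many times higher the SAPS mean is than the naive mean."""
    return saps / naive

gains = [round(gain_over_r3l(s, r)) for (_, r, s) in rows.values()]
ratios = [ratio_over_naive(s, n) for (n, _, s) in rows.values()]

for (enc, ctrl), gain, ratio in zip(rows, gains, ratios):
    print(f"{enc:>5} -> {ctrl:<5}  vs R3L: {gain:+d}%   vs naive: {ratio:.1f}x")
```

Rounded to whole percents, the gains match the last column of the table, and the ratios over naive span roughly 4.7x (Green/Green, Far/Green) to 74x (Blue/Blue).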

Experiments: LunarLander

LunarLander tests SAPS on a precision control task with visual variations (white vs red background) and physics variations (gravity -10 vs -3).

Results: Success and Limitations

| Encoder | Controller | Naive | SAPS (ours) | End-to-end |
|---|---|---|---|---|
| White (g=-10) | White (g=-10) | -413±72 | 19±56 | 221±86 |
| White (g=-10) | Red (g=-10) | -390±176 | 8±60 | - |
| White (g=-10) | White (g=-3) | -276±8 | -242±51 | - |
| Red (g=-10) | White (g=-10) | -444±116 | 52±44 | 192±30 |

Key findings:

- Same gravity stitching: SAPS achieves positive scores (8-52), massive improvement over naive (-390 to -444)

- Cross-gravity stitching: SAPS fails (-242), highlighting limitations of affine transformations for physics variations

- Interpretation: Precision landing task is highly sensitive to latent space misalignment; small errors cascade into crashes

The LunarLander results reveal an important principle: affine transformations work well for visual variations and robust control tasks, but struggle with precision control and physics variations.

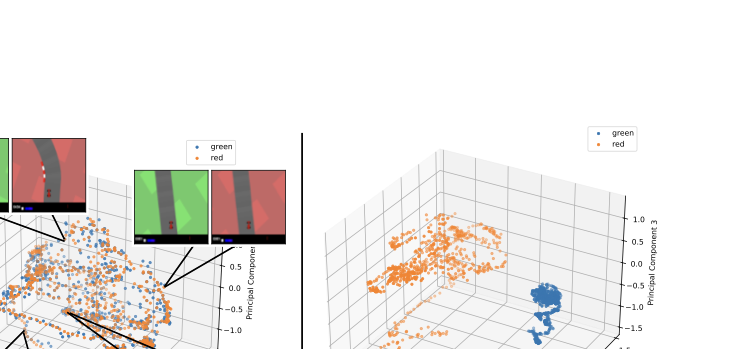

Latent Space Analysis

PCA Visualization

We visualize how SAPS aligns latent spaces by projecting encoder outputs to 3D via PCA.

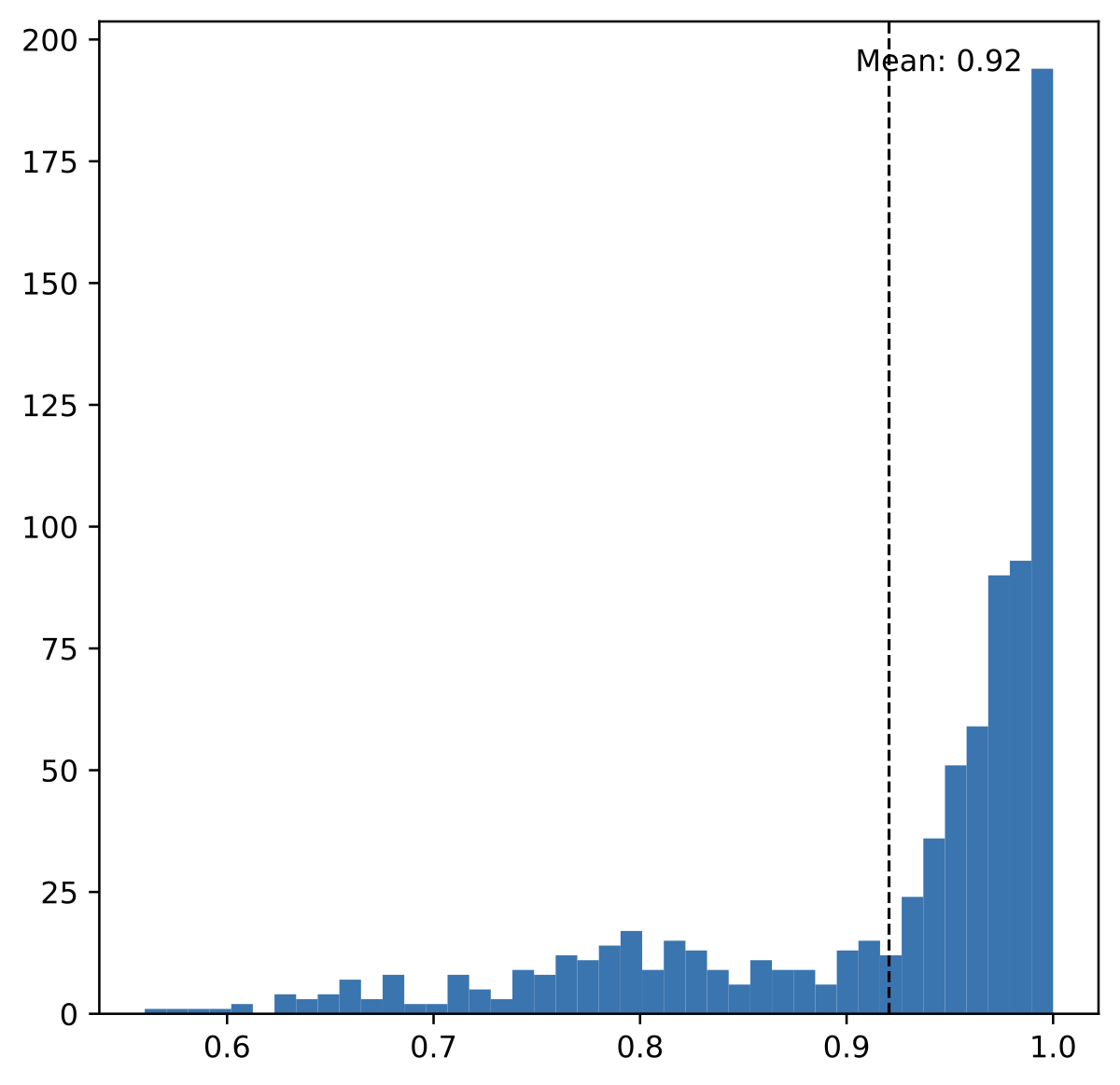

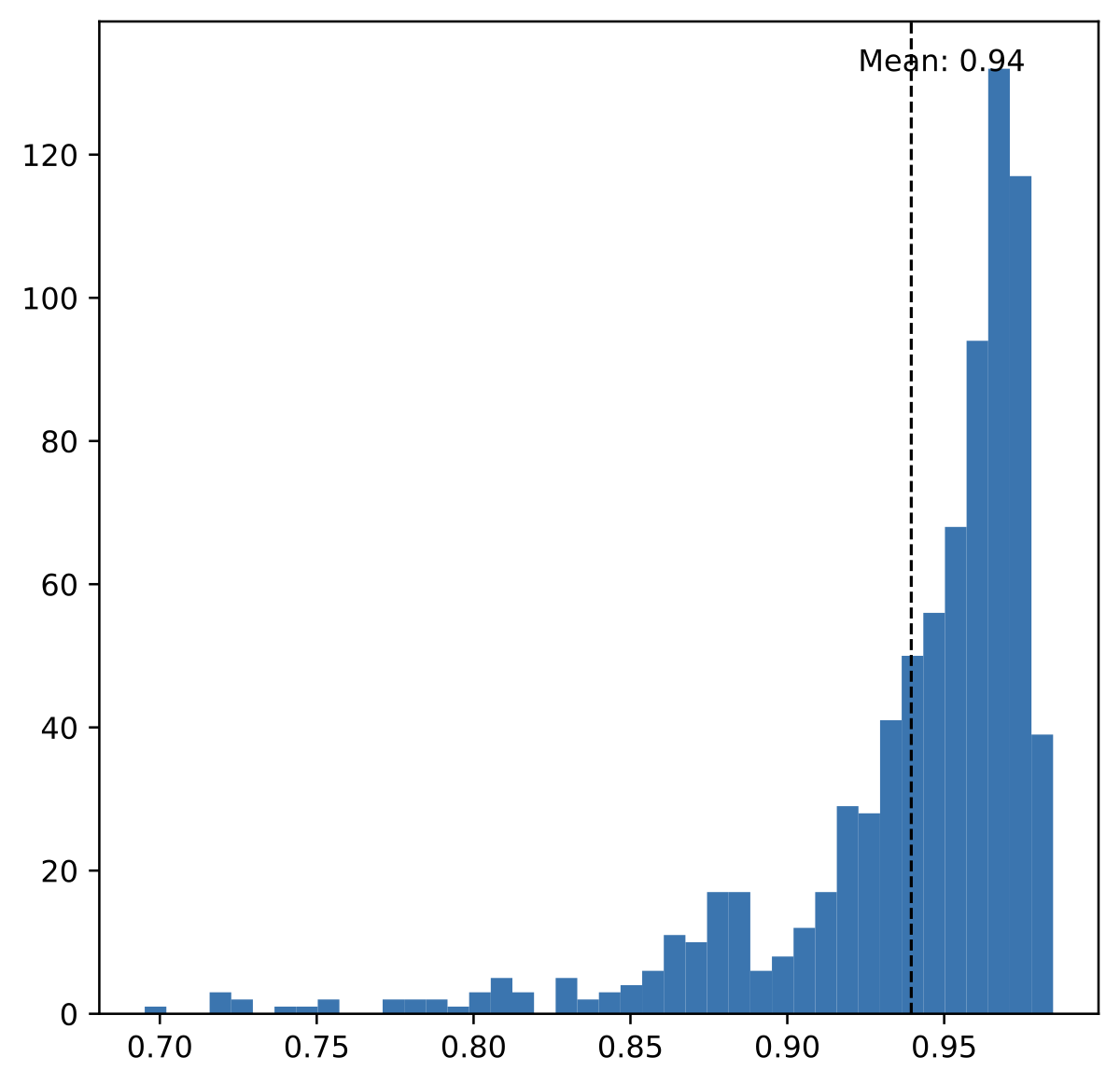

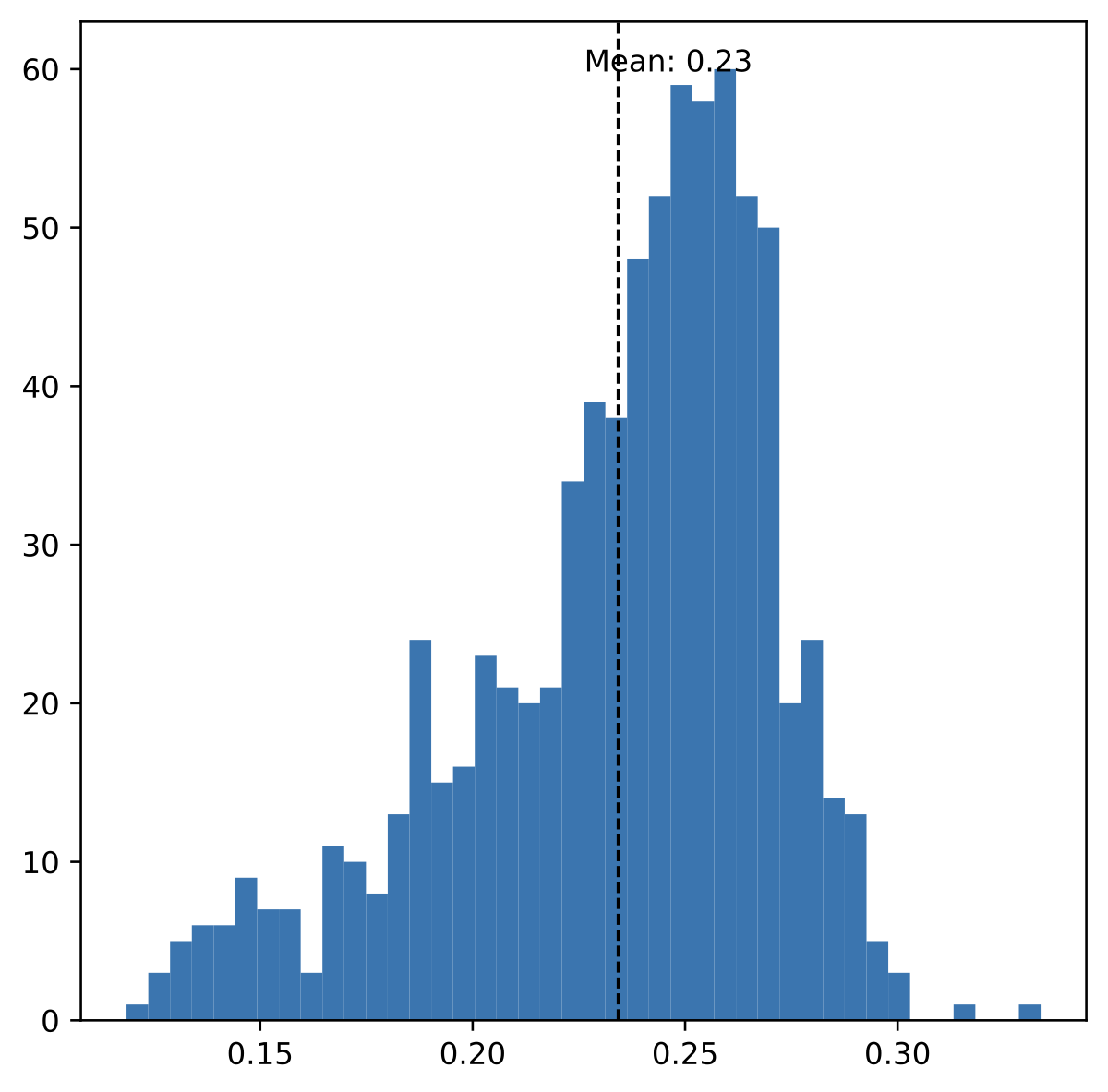

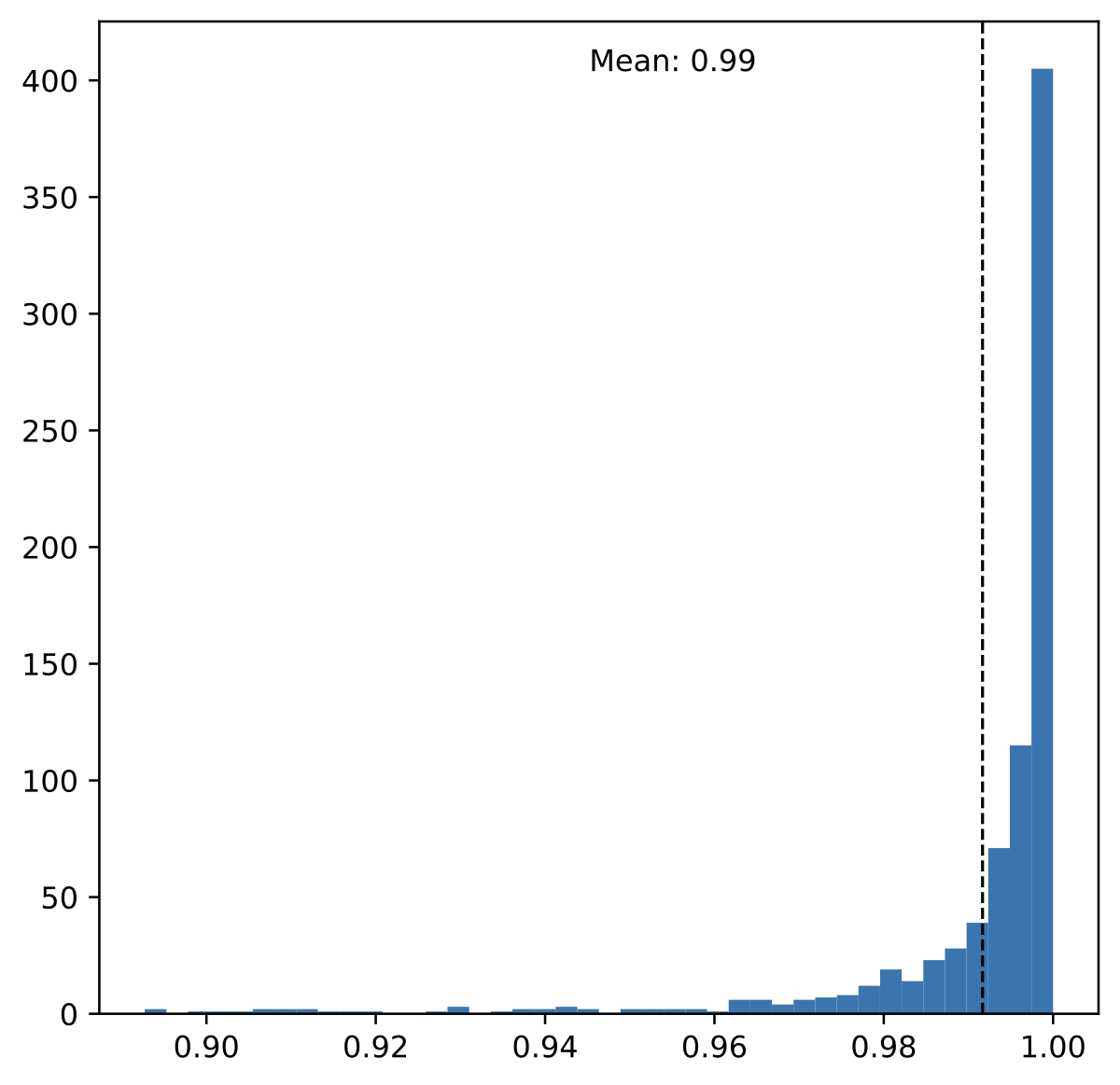

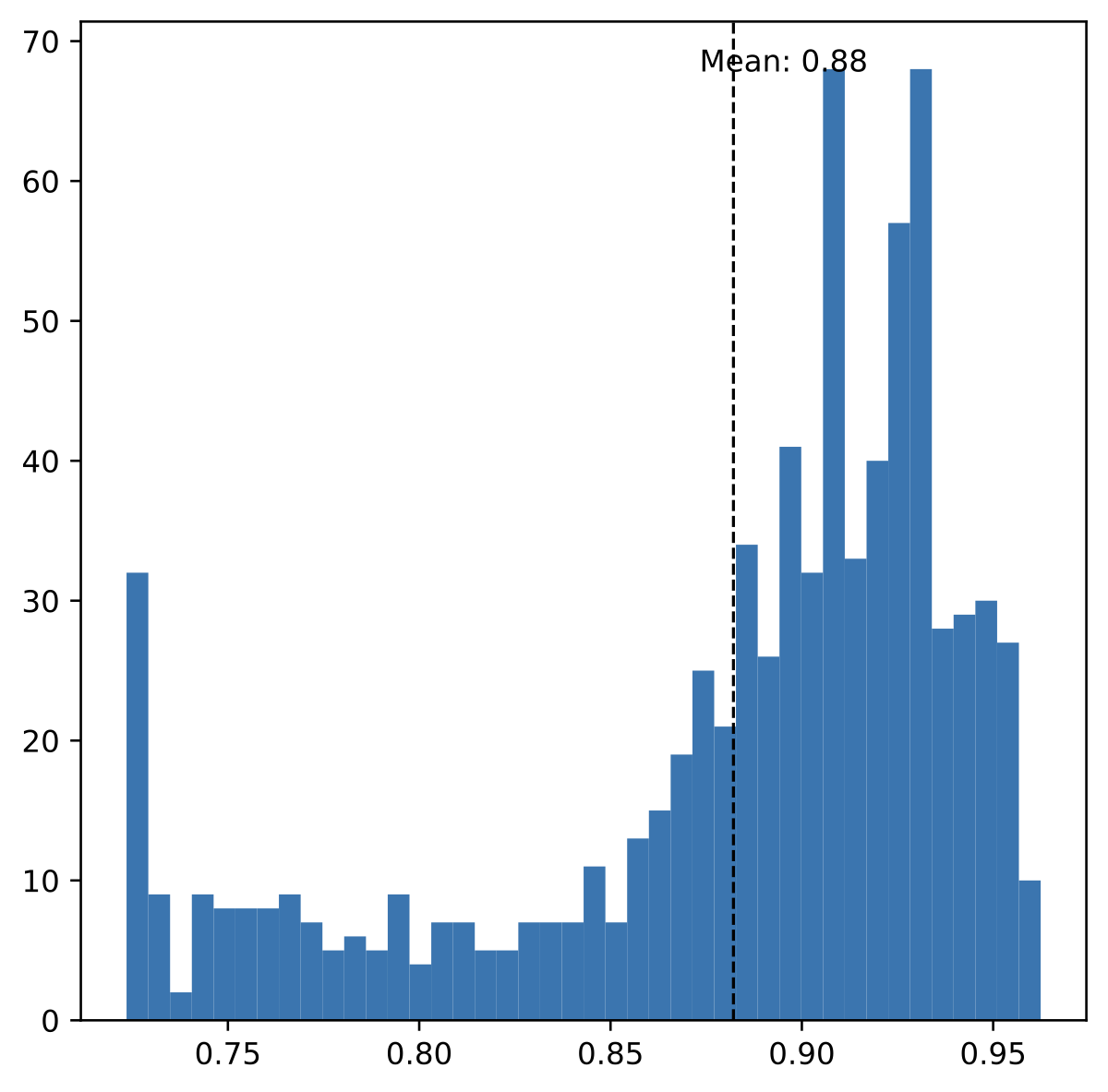

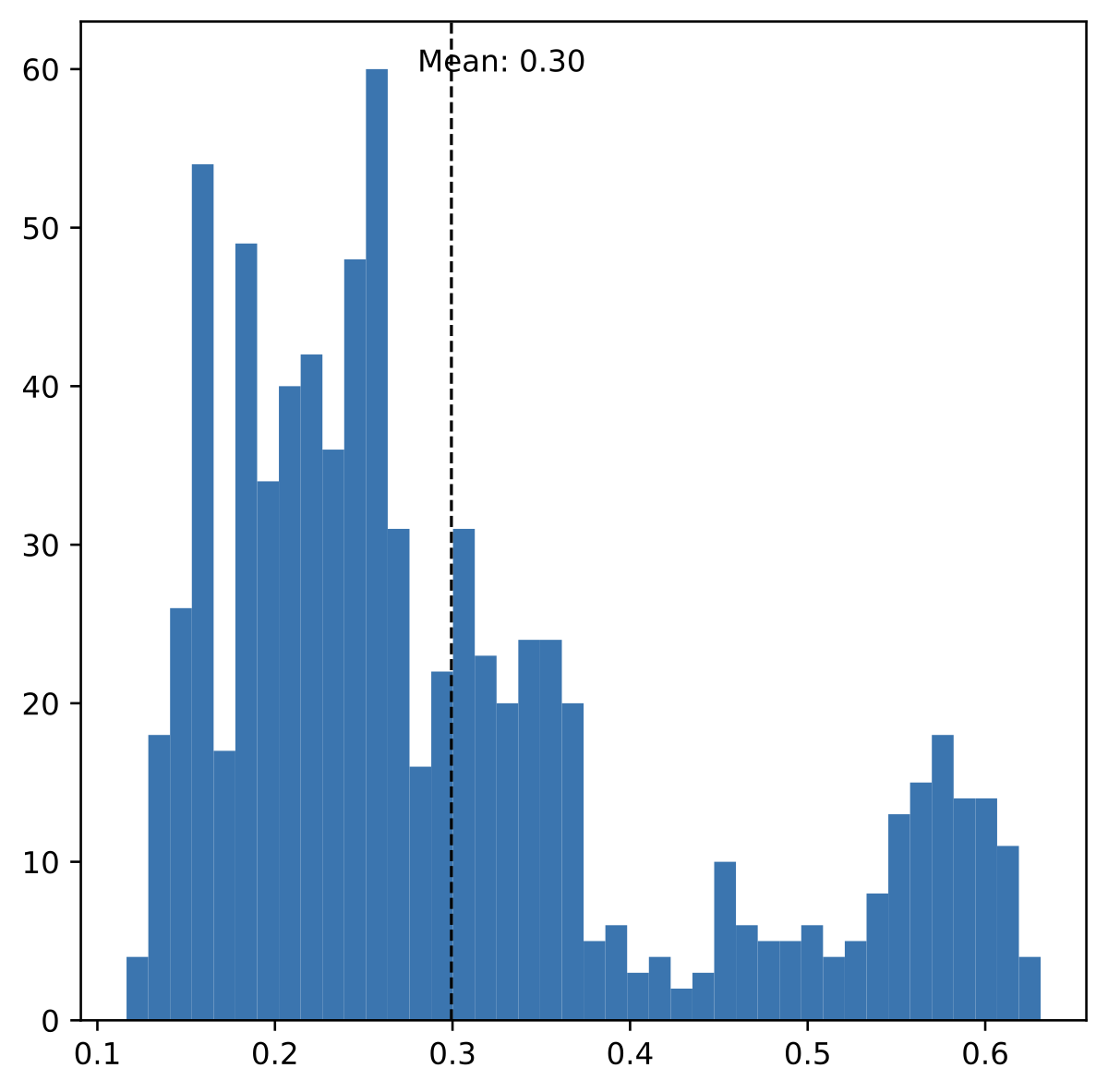
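The 3D projection used for these plots can be reproduced with a few lines of NumPy. This is a minimal sketch that computes PCA through an SVD of the centered data. The function name `pca_project` and the random stand-in latents are illustrative, and the exact preprocessing behind the figures is not specified here.

```python
import numpy as np

def pca_project(latents, n_components=3):
    """Project (N, d) latent vectors onto their top principal components."""
    x = np.asarray(latents, dtype=np.float64)
    centered = x - x.mean(axis=0)
    # Rows of vt are principal directions, ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T

# Stand-in for encoder outputs: 100 latent vectors of dimension 32.
rng = np.random.default_rng(0)
latents = rng.normal(size=(100, 32))
points_3d = pca_project(latents)   # shape (100, 3), ready for a 3D scatter plot
```

To compare two latent spaces in one plot, a reasonable choice is to fit the projection on one set of embeddings and apply the same directions to the other, so that both share the same axes.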

Cosine Similarity Analysis

We measure pairwise cosine similarity between the embeddings of matched frames from different environment variations.

Quantitative results:

- SAPS/R3L: Mean cosine similarity 0.92-0.99 across both environments

- Naive: Mean cosine similarity 0.23-0.30

- Interpretation: Independently trained models exhibit near-identical encodings for semantically identical frames once aligned
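The similarity measure itself is simple to compute. The sketch below assumes that the embeddings of matched frames are stacked row by row in two arrays. The function name `matched_cosine` and the small `eps` guard are illustrative choices.

```python
import numpy as np

def matched_cosine(x_a, x_b, eps=1e-12):
    """Cosine similarity between corresponding rows of two (N, d) arrays."""
    x_a = np.asarray(x_a, dtype=np.float64)
    x_b = np.asarray(x_b, dtype=np.float64)
    dots = np.sum(x_a * x_b, axis=1)
    norms = np.linalg.norm(x_a, axis=1) * np.linalg.norm(x_b, axis=1)
    # eps avoids division by zero for all-zero embeddings.
    return dots / np.maximum(norms, eps)

# Toy embeddings: row 0 identical, row 1 orthogonal, row 2 opposite.
emb_a = np.array([[1.0, 0.0], [1.0, 0.0], [1.0, 2.0]])
emb_b = np.array([[2.0, 0.0], [0.0, 3.0], [-1.0, -2.0]])
sims = matched_cosine(emb_a, emb_b)
mean_similarity = sims.mean()
```

In the aligned setting, `x_a` would hold the transformed source embeddings \(\tau_u^v(\mathbf{x}_u)\) and `x_b` the target embeddings \(\mathbf{x}_v\). In the naive setting, `x_a` would hold the untransformed \(\mathbf{x}_u\).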

Key Findings

- Zero-shot stitching works for robust tasks: SAPS enables modular policy composition across visual and task variations in CarRacing, achieving performance comparable to end-to-end training without any fine-tuning.

- Near-perfect latent alignment: Cosine similarity analysis (0.92-0.99) confirms that independently trained RL encoders learn quasi-isometric representations differing primarily by geometric transformations.

- Practical advantage over R3L: SAPS achieves R3L-level alignment and performance using standard pre-trained models, without requiring specialized relative representation training.

- Precision control reveals limits: LunarLander results show that affine transformations have limits: they handle visual variations well but struggle with physics variations and precision-sensitive tasks.

- Task robustness as moderator: The gap between alignment quality and task performance highlights that robust control tasks (navigation) tolerate small alignment errors, while precision tasks (landing) amplify them.

- Enables compositional RL: By treating encoders and controllers as modular components connected via learned transformations, SAPS opens the door to compositional RL where policies can be assembled like LEGO blocks.

Citation

@article{ricciardi2025zeroshot,
  title = {Mapping representations in Reinforcement Learning via Semantic Alignment for Zero-Shot Stitching},
  author = {Antonio Pio Ricciardi and Valentino Maiorca and Luca Moschella and Riccardo Marin and Emanuele Rodol{\`a}},
  journal = {arXiv preprint arXiv:2503.01881},
  year = {2025}
}

Authors

Antonio Pio Ricciardi¹ · Valentino Maiorca¹ · Luca Moschella¹ · Riccardo Marin² · Emanuele Rodolà¹

¹Sapienza University of Rome, Italy · ²University of Tübingen, Germany