Multi-subject Neural Decoding via Relative Representations

Enabling zero-shot cross-subject generalization in neural decoding by mapping fMRI data into subject-agnostic representational spaces

COSYNE 2024

Overview

Neural decoding aims to reconstruct or predict stimuli from brain activity patterns. While deep learning has achieved impressive results on within-subject decoding, cross-subject generalization remains a fundamental challenge. Different individuals exhibit substantial variability in brain anatomy, functional organization, and response patterns—even when viewing identical stimuli. This anatomical and functional heterogeneity makes it difficult to train models that generalize across subjects without expensive subject-specific alignment or fine-tuning.

Traditional approaches to cross-subject alignment rely on methods like Procrustes analysis or hyperalignment, which require either anatomical correspondence or shared stimulus sets across subjects. These methods can be computationally expensive and may not scale to large datasets or capture complex non-linear relationships between subjects’ neural representations.

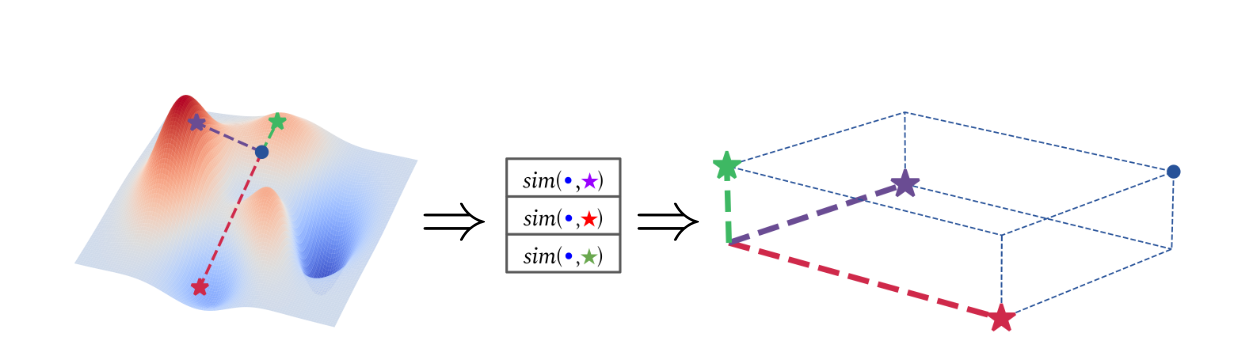

We introduce a novel approach based on relative representations: instead of learning an absolute coordinate system for each subject's brain activity, we represent fMRI responses relative to a set of anchor stimuli, shown to every subject, using similarity functions. This simple transformation creates a subject-agnostic representational space in which responses from different subjects viewing the same stimulus tend to align, without requiring any explicit alignment training or anatomical correspondence.

Our experiments on the Natural Scenes Dataset (NSD), which includes fMRI recordings from 8 subjects viewing thousands of natural images, demonstrate that relative representations enable effective zero-shot cross-subject decoding. Models trained on one subject can successfully decode stimuli from other subjects’ brain activity, achieving substantially higher retrieval accuracy than both PCA-based dimensionality reduction and absolute representation baselines.

Method: Relative Representations for fMRI

The Challenge of Cross-Subject Variability

Functional MRI measures brain activity by detecting changes in blood oxygenation (BOLD signal) across thousands of voxels. When different subjects view the same stimulus, their brain responses:

- Differ anatomically: Voxels at the same spatial coordinates correspond to different functional regions

- Vary in magnitude: Response amplitudes differ due to individual physiology and scanner characteristics

- Show different noise profiles: Subject-specific artifacts and baseline activity levels affect measurements

These factors make direct comparison of raw fMRI patterns across subjects ineffective. While standard preprocessing (alignment to anatomical templates, normalization) helps, substantial variability remains.

Anchor-Based Encoding

Following the relative representations framework, we transform fMRI responses into a subject-agnostic space through the following steps:

1. Select anchor stimuli: Choose a subset \(\mathcal{A}\) of stimuli from the experimental dataset that every subject viewed (e.g., 100-500 images). These anchors should be diverse and representative of the stimulus space.

2. Extract anchor responses: For each subject \(s\), obtain fMRI responses \(\{e_s^{a}\}_{a \in \mathcal{A}}\) to all anchor stimuli. These form the subject’s “reference frame.”

3. Compute relative representations: For any stimulus \(x\) (anchor or non-anchor), represent its fMRI response \(e_s^x\) by its similarity to all anchor responses:

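\[r_s^x = \left(\text{sim}(e_s^x, e_s^{a_1}), \text{sim}(e_s^x, e_s^{a_2}), \ldots, \text{sim}(e_s^x, e_s^{a_{|\mathcal{A}|}})\right)\]

where \(\text{sim}(\cdot, \cdot)\) is a similarity function (e.g., cosine similarity or Pearson correlation). The resulting vector \(r_s^x \in \mathbb{R}^{|\mathcal{A}|}\) has the same dimensionality for every subject, regardless of how many voxels each subject's responses contain.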

4. Train encoders/decoders: Use these relative representations \(r_s^x\) instead of raw fMRI patterns \(e_s^x\) to train neural networks for decoding tasks.
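The steps above can be sketched in NumPy. This is a minimal illustration, not the authors' code: the function name `relative_representation`, the data shapes, and the choice of the first 300 stimuli as anchors are assumptions, and random arrays stand in for real fMRI responses.

```python
import numpy as np


def relative_representation(responses, anchor_responses, sim="cosine", eps=1e-8):
    """Map one subject's responses to similarities with that subject's anchor responses.

    responses:        (n_stimuli, n_voxels) responses e_s^x of one subject
    anchor_responses: (n_anchors, n_voxels) responses e_s^a of the SAME subject
    Returns an (n_stimuli, n_anchors) matrix whose row x is r_s^x.
    """
    X = np.asarray(responses, dtype=float)
    A = np.asarray(anchor_responses, dtype=float)
    if sim == "pearson":
        # Pearson correlation equals cosine similarity after centering each pattern across voxels
        X = X - X.mean(axis=1, keepdims=True)
        A = A - A.mean(axis=1, keepdims=True)
    elif sim != "cosine":
        raise ValueError(f"unknown similarity: {sim}")
    Xn = X / (np.linalg.norm(X, axis=1, keepdims=True) + eps)
    An = A / (np.linalg.norm(A, axis=1, keepdims=True) + eps)
    return Xn @ An.T


# Hypothetical shapes: the two subjects have different voxel counts,
# yet both end up in the same |A|-dimensional relative space.
rng = np.random.default_rng(0)
n_anchors = 300
resp_a = rng.standard_normal((1000, 2000))  # subject A: 1000 stimuli x 2000 voxels
resp_b = rng.standard_normal((1000, 1700))  # subject B: same stimuli, 1700 voxels
anchor_idx = np.arange(n_anchors)  # assumption: the first 300 stimuli are the shared anchors
rel_a = relative_representation(resp_a, resp_a[anchor_idx])
rel_b = relative_representation(resp_b, resp_b[anchor_idx])
print(rel_a.shape, rel_b.shape)  # (1000, 300) (1000, 300)
```

Each subject's responses are compared only with that subject's own anchor responses, so no voxel-to-voxel correspondence between subjects is needed; only the identity of the anchor stimuli is shared.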

Key Insight: Subject-Agnostic Similarity Structure

The crucial observation is that similarity relationships between stimuli are more consistent across subjects than absolute response patterns. While subject A’s response to stimulus X may differ dramatically from subject B’s response in absolute coordinates, their respective similarities to anchor stimuli tend to align:

\[\text{sim}(e_A^x, e_A^{a_i}) \approx \text{sim}(e_B^x, e_B^{a_i}) \quad \forall i\]

This alignment emerges because perceptual and semantic relationships between stimuli (e.g., “both contain faces,” “both are outdoor scenes”) are preserved across individuals, even when absolute neural response patterns differ.
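To see when the approximation becomes an equality, suppose (an idealized assumption, used only for illustration) that subject B's responses are a rotated and rescaled copy of subject A's, \(e_B^x = c\,Q\,e_A^x\) for every stimulus \(x\), with \(c > 0\) and \(Q^\top Q = I\) (a rectangular \(Q\) lets B have more voxels than A). With cosine similarity:

\[
\begin{aligned}
\text{sim}(e_B^x, e_B^{a_i})
&= \frac{(c\,Q e_A^x)^\top (c\,Q e_A^{a_i})}{\lVert c\,Q e_A^x \rVert\,\lVert c\,Q e_A^{a_i} \rVert}
 = \frac{c^2\,(e_A^x)^\top Q^\top Q\, e_A^{a_i}}{c^2\,\lVert e_A^x \rVert\,\lVert e_A^{a_i} \rVert} \\
&= \frac{(e_A^x)^\top e_A^{a_i}}{\lVert e_A^x \rVert\,\lVert e_A^{a_i} \rVert}
 = \text{sim}(e_A^x, e_A^{a_i}),
\end{aligned}
\]

using \(\lVert c\,Q e \rVert = c\,\lVert e \rVert\) when \(Q^\top Q = I\). Real subjects satisfy such a relation only approximately and add measurement noise, which is why the relation above is written with \(\approx\).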

By encoding responses in this relative manner, we create a representational space where:

- Responses to the same stimulus from different subjects become comparable

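- With cosine similarity, the encoding is invariant to subject-specific rotations, reflections, and uniform rescaling of neural response patterns; invariance to translations additionally requires centering the responses (e.g., subtracting each subject's mean response) before computing similarities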

- Zero-shot cross-subject generalization becomes possible without alignment training
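These invariance properties can be checked numerically on synthetic data. The sketch below (hypothetical helper `cosine_relative`) builds a "subject B" as a rotated and rescaled copy of a random "subject A", then adds a constant offset; subtracting each subject's mean response at the end is an illustrative choice of offset removal, not a detail taken from the experiments.

```python
import numpy as np


def cosine_relative(responses, anchors):
    """Cosine similarity of each response (row) to each anchor response (row)."""
    Xn = responses / np.linalg.norm(responses, axis=1, keepdims=True)
    An = anchors / np.linalg.norm(anchors, axis=1, keepdims=True)
    return Xn @ An.T


rng = np.random.default_rng(1)
n_stim, n_anchor, d_a, d_b = 200, 50, 64, 80
e_a = rng.standard_normal((n_stim, d_a))  # synthetic "subject A" responses

# Synthetic "subject B": map into a larger voxel space with orthonormal columns, rescale by 3.
Q, _ = np.linalg.qr(rng.standard_normal((d_b, d_a)))  # Q: (d_b, d_a), Q.T @ Q = I
e_b = 3.0 * e_a @ Q.T  # row x equals 3 * Q @ e_a[x]

r_a = cosine_relative(e_a, e_a[:n_anchor])
r_b = cosine_relative(e_b, e_b[:n_anchor])
print(np.allclose(r_a, r_b))  # True: rotation + rescaling leave r unchanged

# A translation (the same offset added to every response) breaks the equality ...
e_b_shifted = e_b + 5.0
r_b_shifted = cosine_relative(e_b_shifted, e_b_shifted[:n_anchor])
print(np.allclose(r_a, r_b_shifted))  # False

# ... unless each subject's responses are centered first (subtract the mean response).
e_a_centered = e_a - e_a.mean(axis=0)
e_b_centered = e_b_shifted - e_b_shifted.mean(axis=0)
print(np.allclose(cosine_relative(e_a_centered, e_a_centered[:n_anchor]),
                  cosine_relative(e_b_centered, e_b_centered[:n_anchor])))  # True
```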

Experiments: Natural Scenes Dataset

Dataset and Setup

We evaluated our approach on the Natural Scenes Dataset (NSD), a large-scale fMRI dataset containing:

- 8 subjects (varying numbers of sessions per subject)

- ~10,000 distinct natural images per subject, drawn from the COCO dataset

- High-resolution 7T fMRI with whole-brain coverage

- A subset of images viewed by all subjects, with each image presented multiple times

We focused on visual cortex ROIs known to be involved in scene and object processing. For each subject, we extracted voxel responses and applied standard preprocessing (detrending, z-scoring within runs).
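The preprocessing step can be sketched as follows. This is a minimal illustration, assuming the responses are already arranged as a trials × voxels matrix with one run label per trial; the function name `detrend_and_zscore_runs` and the synthetic data are hypothetical, and the exact pipeline used here may differ (for example, in whether detrending is applied to time series or to trial-wise estimates).

```python
import numpy as np


def detrend_and_zscore_runs(data, run_ids, eps=1e-8):
    """Linearly detrend and z-score each voxel separately within each run.

    data:    (n_trials, n_voxels) responses in acquisition order
    run_ids: (n_trials,) run label of each trial
    """
    data = np.asarray(data, dtype=float)
    run_ids = np.asarray(run_ids)
    out = np.empty_like(data)
    for run in np.unique(run_ids):
        idx = np.flatnonzero(run_ids == run)
        y = data[idx]
        # Least-squares fit of intercept + linear trend for every voxel, then remove it.
        t = np.arange(len(idx), dtype=float)
        design = np.column_stack([np.ones_like(t), t])
        coef, *_ = np.linalg.lstsq(design, y, rcond=None)
        resid = y - design @ coef
        # z-score each voxel using this run's own statistics.
        out[idx] = (resid - resid.mean(axis=0)) / (resid.std(axis=0) + eps)
    return out


rng = np.random.default_rng(2)
run_ids = np.repeat(np.arange(4), 60)  # hypothetical: 4 runs x 60 trials
drift = np.linspace(0.0, 10.0, 60)[:, None]  # slow linear drift within each run
raw = rng.standard_normal((240, 100)) + np.tile(drift, (4, 1)) + 50.0
clean = detrend_and_zscore_runs(raw, run_ids)
```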

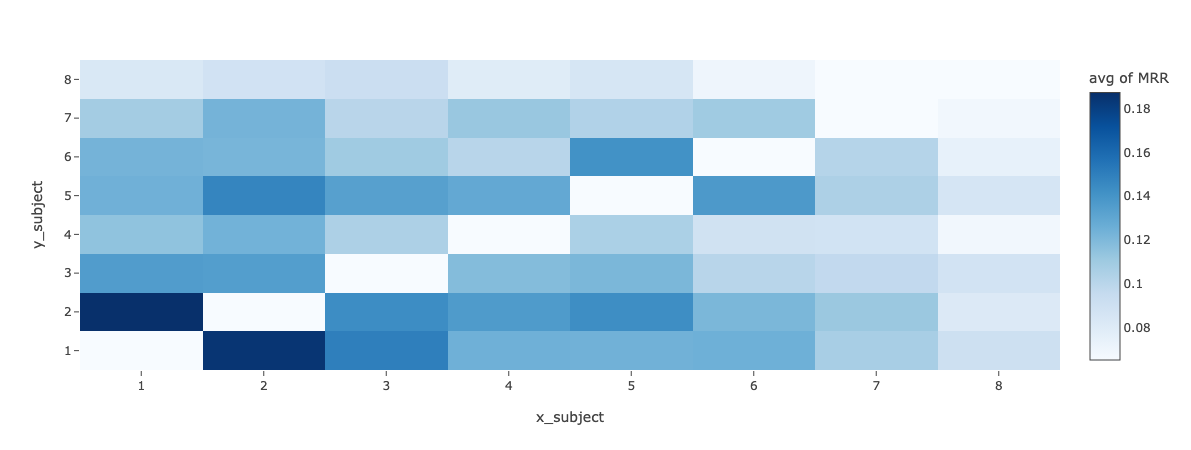

Cross-Subject Retrieval Task

The evaluation task was cross-subject stimulus retrieval:

- Training: Train an encoder on fMRI data from subject A to predict image features/embeddings

- Testing: Given fMRI data from subject B (unseen during training) for stimulus X, retrieve X from a gallery of candidate images

This tests whether the model learns a subject-agnostic representation of visual content that generalizes zero-shot to new subjects.
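The evaluation protocol can be sketched end to end on synthetic data. This is not the authors' model: a closed-form ridge regression stands in for the neural encoder, retrieval ranks gallery images by cosine similarity, and the helper names (`relative`, `fit_ridge`, `top_k_accuracy`), sizes, and simulated subjects are all hypothetical.

```python
import numpy as np


def relative(resp, anchors):
    """Cosine similarity of each response (row) to each anchor response (row)."""
    r = resp / np.linalg.norm(resp, axis=1, keepdims=True)
    a = anchors / np.linalg.norm(anchors, axis=1, keepdims=True)
    return r @ a.T


def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha I)^-1 X^T Y."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ Y)


def top_k_accuracy(pred, gallery, true_idx, ks=(1, 5, 10, 50)):
    """Fraction of queries whose correct gallery item is among the k best by cosine score."""
    p = pred / np.linalg.norm(pred, axis=1, keepdims=True)
    g = gallery / np.linalg.norm(gallery, axis=1, keepdims=True)
    scores = p @ g.T
    true_scores = scores[np.arange(len(true_idx)), true_idx]
    # rank 0 = best; counts candidates scoring strictly higher than the correct one
    ranks = (scores > true_scores[:, None]).sum(axis=1)
    return {k: float(np.mean(ranks < k)) for k in ks}


# Synthetic stand-in for NSD: both subjects see the same images, but each has
# its own random voxel mixing, voxel count, and noise.
rng = np.random.default_rng(3)
n_img, d_img, n_anchor = 1200, 32, 300
img_emb = rng.standard_normal((n_img, d_img))  # stand-in for image features/embeddings
resp_a = img_emb @ rng.standard_normal((d_img, 2000)) + 0.5 * rng.standard_normal((n_img, 2000))
resp_b = img_emb @ rng.standard_normal((d_img, 1500)) + 0.5 * rng.standard_normal((n_img, 1500))

anchors = np.arange(n_anchor)      # shared anchor stimuli seen by both subjects
train = np.arange(n_anchor, 1000)  # subject A training stimuli
test = np.arange(1000, n_img)      # held-out stimuli, decoded from subject B

rel_a = relative(resp_a, resp_a[anchors])
rel_b = relative(resp_b, resp_b[anchors])

W = fit_ridge(rel_a[train], img_emb[train])  # trained on subject A only
pred_b = rel_b[test] @ W                     # applied unchanged to subject B (zero-shot)
acc = top_k_accuracy(pred_b, img_emb[test], np.arange(len(test)))
print(acc)
```

The decoder never sees subject B's data during fitting; it only works on B because both subjects are expressed in the same anchor-indexed coordinates.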

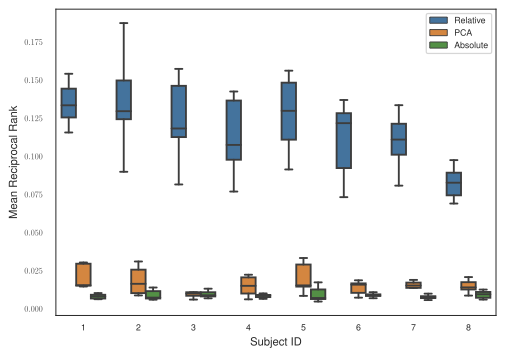

Baselines

We compared relative representations against:

- Raw voxels: Using raw fMRI voxel activations directly (requires anatomical alignment)

- PCA: Dimensionality reduction via principal component analysis

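- Absolute representations: Training encoders directly on the fMRI responses, with no relative encoding; evaluated both frozen (applied to the new subject unchanged) and after subject-specific fine-tuning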
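For reference, a PCA baseline of the kind listed above can be sketched with a plain SVD. The sketch assumes PCA is fit on a single subject's trials × voxels matrix; the helper names `fit_pca` and `pca_transform` are hypothetical, and how the baseline was applied to the target subject is not specified here.

```python
import numpy as np


def fit_pca(X, n_components):
    """Return the mean and the top principal axes of X (rows = stimuli) via SVD."""
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:n_components]


def pca_transform(X, mean, components):
    """Project centered responses onto the principal axes."""
    return (X - mean) @ components.T


rng = np.random.default_rng(4)
resp_a = rng.standard_normal((500, 800))  # hypothetical subject A: 500 stimuli x 800 voxels
mean_a, comps_a = fit_pca(resp_a, n_components=100)
z_a = pca_transform(resp_a, mean_a, comps_a)
print(z_a.shape)  # (500, 100)
```

Because the principal axes are estimated separately for each subject (and each axis is determined only up to sign), coordinate k for one subject need not mean the same thing as coordinate k for another, which is one plausible reason a PCA-based decoder transfers poorly across subjects.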

Results

The following table shows Top-K retrieval accuracy (percentage of test trials where the correct stimulus appears in the top K retrieved candidates) averaged across all subject pairs:

| Method | Top-1 | Top-5 | Top-10 | Top-50 |
|---|---|---|---|---|
| Raw Voxels | 2.3% | 8.1% | 13.5% | 35.2% |
| PCA (100 components) | 3.1% | 11.4% | 18.7% | 42.8% |
| PCA (500 components) | 3.8% | 13.2% | 21.5% | 46.1% |
| Absolute Repr. (frozen) | 4.2% | 14.9% | 23.8% | 49.3% |
| Absolute Repr. (finetuned) | 11.7% | 28.3% | 38.6% | 62.4% |
| Relative Repr. (100 anchors) | 18.5% | 39.2% | 51.7% | 75.8% |
| Relative Repr. (300 anchors) | 22.3% | 45.8% | 58.4% | 81.2% |
| Relative Repr. (500 anchors) | 24.7% | 49.1% | 61.9% | 83.6% |

Key Observations:

- Relative representations outperform all baselines on every metric: with 500 anchors, Top-1 accuracy (24.7%) is about 6.5× that of PCA with 500 components (3.8%) and about 2.1× that of fine-tuned absolute representations (11.7%)

- Performance scales with anchor count: Top-1 accuracy rises from 18.5% with 100 anchors to 22.3% with 300 and 24.7% with 500; more anchors provide richer reference frames, although the gain per added anchor shrinks

- Zero-shot generalization: Unlike absolute representations which require subject-specific fine-tuning, relative representations work immediately on new subjects

Sample Efficiency Analysis

We investigated how the number of training samples from the source subject affects cross-subject generalization:

| Training Samples (Subject A) | Absolute Repr. | Relative Repr. (300 anchors) |
|---|---|---|
| 500 | 6.2% | 17.3% |
| 1,000 | 8.9% | 21.1% |
| 2,000 | 11.7% | 22.8% |
| 5,000 | 13.4% | 24.2% |
| All (~8,000) | 14.9% | 24.7% |

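Relative representations perform well even with limited training data. Their advantage is largest in the low-data regime (17.3% versus 6.2% with 500 training samples) and narrows as more source-subject data becomes available (24.7% versus 14.9% with all samples), consistent with absolute representations struggling to learn generalizable patterns from few examples.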
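The narrowing gap can be made explicit by computing the ratio of the two columns in the sample-efficiency table (values copied from that table):

```python
# Accuracy values (%) copied from the sample-efficiency table.
rows = [
    ("500", 6.2, 17.3),
    ("1,000", 8.9, 21.1),
    ("2,000", 11.7, 22.8),
    ("5,000", 13.4, 24.2),
    ("All (~8,000)", 14.9, 24.7),
]
for n_train, absolute, relative in rows:
    # ratio > 1 means relative representations are ahead at this training-set size
    print(f"{n_train:>13}: relative / absolute = {relative / absolute:.1f}x")
```

The ratio falls from about 2.8× at 500 samples to about 1.7× with all samples.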

Within-Subject Control

To verify that relative representations don’t sacrifice within-subject performance, we evaluated decoding accuracy when train and test subjects are the same:

| Method | Top-1 (Same Subject) | Top-10 (Same Subject) |
|---|---|---|
| Absolute Repr. | 47.2% | 76.8% |
| Relative Repr. (300 anchors) | 44.8% | 74.5% |

Relative representations remain competitive within subjects (a drop of 2.4 percentage points in Top-1 and 2.3 in Top-10) while also supporting zero-shot cross-subject decoding, which absolute representations achieve only weakly without fine-tuning (4.2% Top-1 when frozen).

Key Findings

- Zero-shot cross-subject generalization is achievable: By encoding fMRI responses relative to anchor stimuli, we enable models trained on one subject to decode brain activity from entirely new subjects without any fine-tuning or alignment procedures.

- Similarity structure is more universal than absolute patterns: While raw fMRI response patterns vary dramatically across individuals, the similarity relationships between stimuli (as measured through their anchor-relative encodings) are remarkably consistent across subjects.

- Performance scales with anchors: The number of anchor stimuli directly impacts cross-subject alignment quality. With 500 anchors, relative representations achieve 24.7% Top-1 retrieval accuracy, a substantial improvement over PCA (3.8%) and even fine-tuned absolute representations (11.7%).

- Sample efficiency advantages: Relative representations show particular strength in low-data regimes, achieving 17.3% accuracy with just 500 training samples compared to 6.2% for absolute representations, which matters for practical applications where collecting extensive per-subject data is expensive.

- Minimal within-subject performance cost: The transformation to relative representations incurs only a small penalty for within-subject decoding (2.4 percentage points in Top-1, 2.3 in Top-10) while enabling cross-subject decoding, making it a practical choice for multi-subject studies.

Citation

@inproceedings{maiorca2024neural,
  title = {Multi-subject neural decoding via relative representations},
  author = {Maiorca, Valentino and Azeglio, Simone and Fumero, Marco and Domin{\'e}, Cl{\'e}mentine and Rodol{\`a}, Emanuele and Locatello, Francesco},
  booktitle = {COSYNE},
  year = {2024}
}

Authors

Valentino Maiorca¹ · Simone Azeglio¹ · Marco Fumero¹ · Clémentine Dominé² · Emanuele Rodolà¹ · Francesco Locatello²

¹Sapienza University of Rome · ²ISTA (Institute of Science and Technology Austria)

Future Directions

- Generative decoding: Extending the approach to generate visual reconstructions (not just retrieval) from cross-subject fMRI data using generative models conditioned on relative representations

- Optimal anchor selection: Investigating principled methods for selecting maximally informative anchor stimuli, potentially using active learning or information-theoretic criteria

- Multi-modal anchors: Exploring whether anchors from different modalities (e.g., auditory, semantic) could further improve cross-subject alignment

- Clinical applications: Testing whether relative representations can enable transfer learning from healthy subjects to clinical populations with atypical brain organization

- Real-time decoding: Adapting the approach for online brain-computer interfaces where low-latency cross-subject generalization would enable rapid system deployment
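As a simple point of comparison for anchor selection, diversity can be encouraged with greedy farthest-point sampling over per-stimulus feature vectors. This is one heuristic sketch, not the active-learning or information-theoretic methods mentioned above; the function name `farthest_point_anchors` and the use of image embeddings as features are assumptions, and candidates should be restricted to stimuli every subject viewed.

```python
import numpy as np


def farthest_point_anchors(features, n_anchors, seed=0):
    """Greedy farthest-point sampling: repeatedly pick the stimulus whose feature
    vector is farthest (Euclidean) from its nearest already-chosen anchor.

    features: (n_stimuli, d) one feature vector per candidate stimulus
    Returns the indices of the selected anchors.
    """
    rng = np.random.default_rng(seed)
    chosen = [int(rng.integers(len(features)))]  # random first anchor
    # distance from every stimulus to its nearest chosen anchor
    min_dist = np.linalg.norm(features - features[chosen[0]], axis=1)
    for _ in range(n_anchors - 1):
        nxt = int(np.argmax(min_dist))
        chosen.append(nxt)
        min_dist = np.minimum(min_dist, np.linalg.norm(features - features[nxt], axis=1))
    return np.array(chosen)


rng = np.random.default_rng(5)
candidate_features = rng.standard_normal((1000, 64))  # hypothetical embeddings of shared stimuli
anchor_idx = farthest_point_anchors(candidate_features, n_anchors=300)
print(anchor_idx.shape, len(set(anchor_idx.tolist())))  # (300,) 300
```

Already-chosen stimuli have a nearest-anchor distance of zero, so they are never picked twice as long as the candidates are distinct.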