LinEAS: End-to-end Learning of Activation Steering with a Distributional Loss

Controlling generative models through learned affine transformations on activations

NeurIPS 2025

Overview

Controlling the behavior of large generative models—steering them away from toxic outputs or towards desired stylistic attributes—is crucial for safe and useful AI systems. However, existing approaches typically require extensive retraining, large paired datasets, or manual intervention.

LinEAS (Linear End-to-end Activation Steering) addresses this challenge by learning simple affine transformations applied to a model’s internal activations.

Our method combines three key innovations:

- Distributional loss using optimal transport: Rather than requiring paired examples, we match the distribution of model outputs to a target distribution using Wasserstein distance

- End-to-end learning across all layers: We jointly optimize steering vectors across the entire network, allowing the method to discover which layers are most effective for control

- Automatic neuron selection via sparsity: L1 regularization automatically identifies the minimal set of neurons needed for steering, requiring only 32 unpaired samples

Method

Problem Formulation

Given a pre-trained generative model \(f_\theta\), we want to steer its outputs from an initial distribution \(\mathcal{S}\) (e.g., toxic text) towards a target distribution \(\mathcal{T}\) (e.g., non-toxic text). Unlike supervised approaches, we only have access to unpaired samples from each distribution.

Affine Activation Steering

We parameterize steering as affine transformations applied to the model’s internal activations at each layer \(\ell\):

\[h^\ell_{\text{steered}} = \text{diag}(\alpha^\ell) \cdot h^\ell + \beta^\ell\]

where \(h^\ell \in \mathbb{R}^d\) are the activations at layer \(\ell\), and \(\alpha^\ell, \beta^\ell \in \mathbb{R}^d\) are learnable scaling and shift parameters.
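As a rough illustration of the affine map above, the following sketch applies a per-neuron scale and shift to a single activation vector. The function name `steer` and the toy values are assumptions made for this example, not part of the LinEAS code; plain Python lists stand in for tensors.

```python
def steer(h, alpha, beta):
    """Element-wise form of diag(alpha) @ h + beta for one activation vector."""
    if not (len(h) == len(alpha) == len(beta)):
        raise ValueError("h, alpha and beta must all have length d")
    return [a * x + b for x, a, b in zip(h, alpha, beta)]


# Toy activations for a layer with d = 3 neurons (illustrative values only).
h = [0.5, -1.0, 2.0]
alpha = [1.0, 0.0, 2.0]   # per-neuron scale
beta = [0.0, 0.25, -1.0]  # per-neuron shift

print(steer(h, alpha, beta))                         # [0.5, 0.25, 3.0]
print(steer(h, [1.0] * 3, [0.0] * 3) == h)           # True: identity parameters
```

With \(\alpha^\ell = 1\) and \(\beta^\ell = 0\) the map is the identity, so the unsteered model is recovered as a special case.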

Optimal Transport Loss

To train these parameters without paired data, we minimize the Wasserstein distance between the distribution of steered outputs and the target distribution:

\[\mathcal{L}_{OT} = W_2(\mathcal{P}_{\text{steered}}, \mathcal{T})\]

This distributional loss allows learning from unpaired examples: we don’t need to know which specific input should map to which target output, only that the overall distribution should match.
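To make the "unpaired" property concrete, here is a minimal sketch of an empirical 2-Wasserstein distance in the simplest setting: one-dimensional samples of equal size, where the optimal coupling is obtained by sorting both samples. This setting is an assumption chosen for illustration; how LinEAS actually computes the distance over high-dimensional activations is not specified here, and `wasserstein2_1d` is a hypothetical helper name.

```python
import math


def wasserstein2_1d(xs, ys):
    """Empirical W2 between two equal-size 1-D samples.

    In one dimension the optimal transport plan matches the i-th smallest
    value of xs with the i-th smallest value of ys, so sorting suffices.
    """
    if len(xs) != len(ys) or not xs:
        raise ValueError("expects two non-empty samples of equal size")
    xs_sorted, ys_sorted = sorted(xs), sorted(ys)
    mean_sq = sum((x - y) ** 2 for x, y in zip(xs_sorted, ys_sorted)) / len(xs)
    return math.sqrt(mean_sq)


steered = [0.9, 0.1, 0.5]  # toy "steered" outputs, in arbitrary order
target = [1.5, 1.1, 1.9]   # toy target samples, also in arbitrary order
print(wasserstein2_1d(steered, target))  # approximately 1.0
```

Because both samples are sorted internally, shuffling either list leaves the result unchanged, which is why no pairing between source and target examples is needed.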

Sparse Regularization

To automatically select relevant neurons and prevent overfitting with limited data, we add L1 regularization:

\[\mathcal{L}_{\text{total}} = \mathcal{L}_{OT} + \lambda \|\alpha\|_1\]

This encourages sparse steering vectors, typically activating only a small fraction of neurons at each layer. Remarkably, effective steering can be learned with as few as 32 unpaired samples per distribution.
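The objective above can be sketched as follows, assuming the L1 norm is summed over the \(\alpha^\ell\) of every layer (the formula does not spell out the layer index, so this is an interpretation). The helper names, the toy parameter values, and the `active_fraction` diagnostic are all hypothetical.

```python
def l1_norm(params_per_layer):
    """Sum of absolute values over all layers and neurons."""
    return sum(abs(p) for layer in params_per_layer for p in layer)


def total_loss(ot_loss, alpha_per_layer, lam):
    """L_total = L_OT + lambda * ||alpha||_1, with alpha stacked over layers."""
    return ot_loss + lam * l1_norm(alpha_per_layer)


def active_fraction(params_per_layer, tol=0.0):
    """Fraction of entries whose magnitude exceeds tol (a simple sparsity measure)."""
    flat = [p for layer in params_per_layer for p in layer]
    return sum(abs(p) > tol for p in flat) / len(flat)


# Two toy layers with 3 and 2 neurons; values are illustrative only.
alpha = [[1.0, 0.0, -0.5], [0.0, 2.0]]
print(total_loss(ot_loss=1.0, alpha_per_layer=alpha, lam=0.5))  # 2.75
print(active_fraction(alpha))                                   # 0.6
```

Raising `lam` makes nonzero entries more expensive relative to the transport term, which is the mechanism that pushes the learned parameters toward sparsity.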

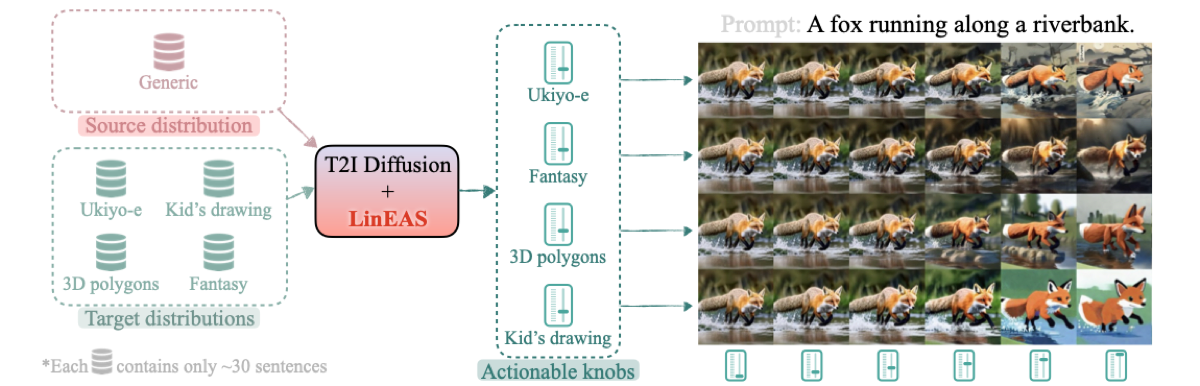

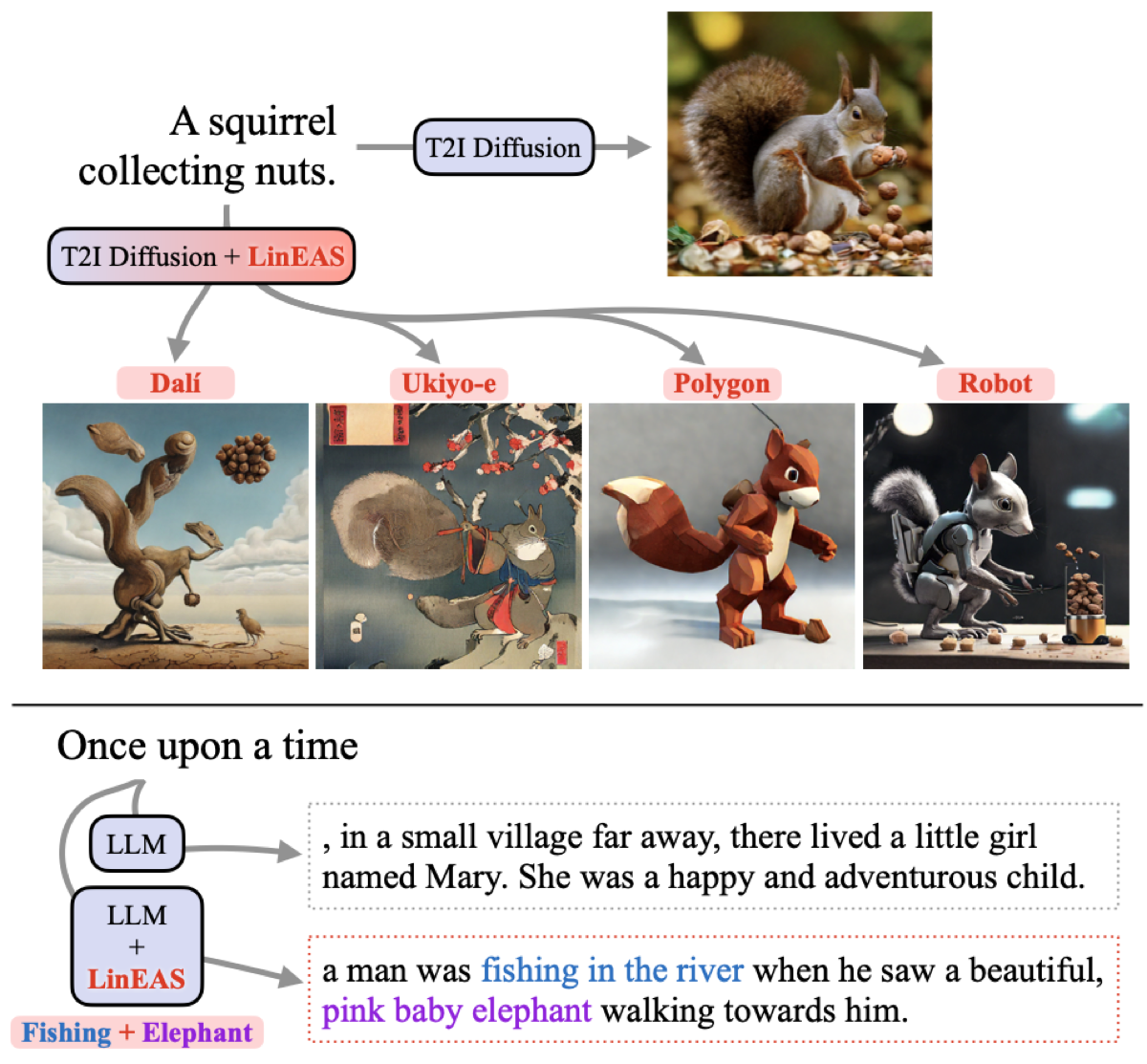

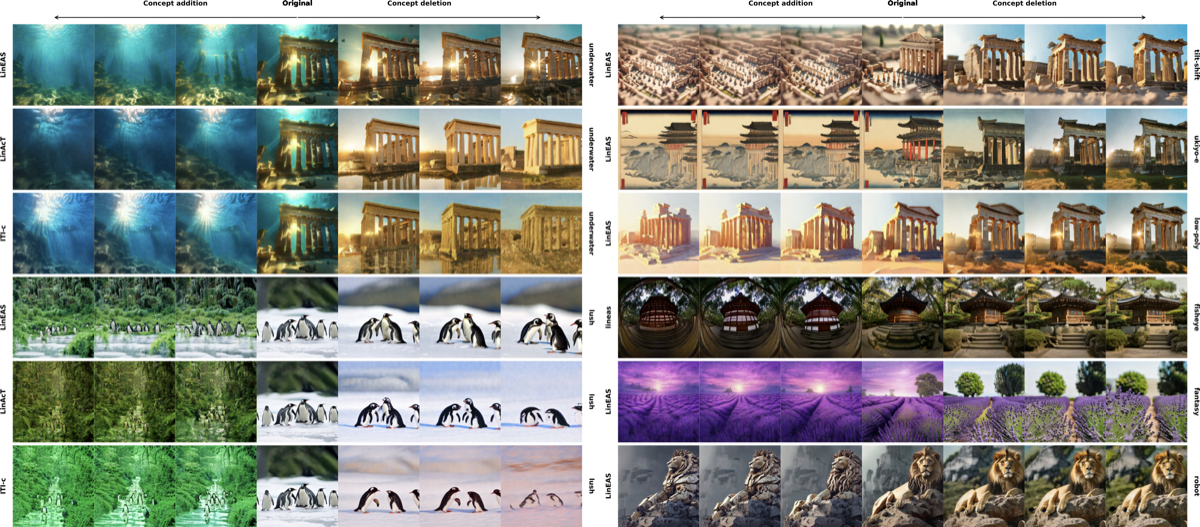

Text-to-Image Generation: Style Control

One of the most visually striking applications of LinEAS is controlling artistic style in text-to-image diffusion models. With just 32 example images per style, we can steer Stable Diffusion to generate images in specific artistic styles.

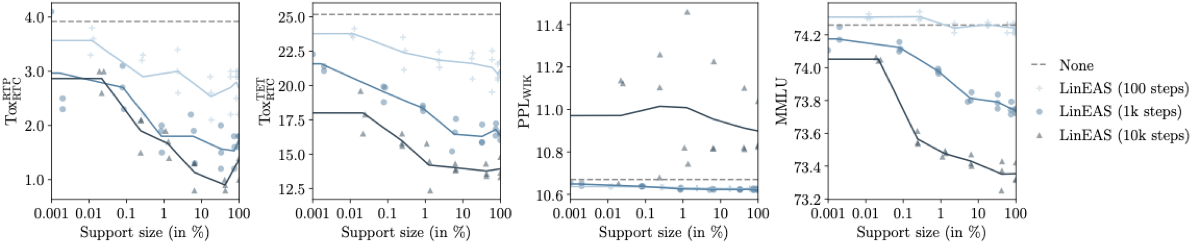

Toxicity Mitigation in Language Models

We evaluate LinEAS on reducing toxic content generation in large language models using multiple benchmarks.

Results:

- 40-60% toxicity reduction compared to the base model across multiple metrics

- Minimal fluency degradation: Perplexity increases by less than 10%

- Sample efficiency: 10× fewer examples than gradient-based baselines

- Interpretable interventions: Sparse activation patterns reveal which layers and neurons are most responsible for toxicity

Comparison with Other Methods

Ablation Studies

Key findings from ablations:

| Component | Performance Impact |
|---|---|
| End-to-end optimization | +25-30% vs. sequential |
| Sparse regularization (L1) | +15-20% with <64 samples |
| Optimal transport loss | +20% vs. MSE loss |
| Middle layers | Contribute most to steering |

Key Findings

- Distributional losses enable unpaired learning: By matching distributions via optimal transport, we can learn effective steering without expensive paired data collection; just 32 unpaired examples suffice.

- Sparsity is crucial for sample efficiency: L1 regularization automatically identifies minimal steering interventions (5-20% of neurons per layer), enabling robust learning from limited data.

- End-to-end optimization outperforms layer-wise approaches: Joint optimization across layers discovers more effective steering strategies than sequential methods, with 25-30% improvement.

- LinEAS generalizes across modalities: The same framework works for language models (toxicity mitigation) and diffusion models (style control), suggesting broad applicability to generative AI systems.

- Practical control without retraining: Steering vectors can be computed in minutes and applied at inference time without modifying model weights, enabling flexible post-hoc control.
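The last point, applying steering at inference time without touching model weights, can be pictured as wrapping a frozen layer with a learned scale and shift. The sketch below is a toy under stated assumptions: `frozen_layer` is a hypothetical stand-in for a pretrained layer, and `with_steering` is an illustrative helper rather than an API from the LinEAS release.

```python
def with_steering(layer_fn, alpha, beta):
    """Return a callable that steers layer_fn's output; layer_fn itself is unchanged."""
    def steered_layer(x):
        h = layer_fn(x)  # original activations from the frozen layer
        return [a * v + b for v, a, b in zip(h, alpha, beta)]
    return steered_layer


def frozen_layer(x):
    """Hypothetical pretrained layer: doubles every input value."""
    return [2.0 * v for v in x]


steered_layer = with_steering(frozen_layer, alpha=[1.0, 0.5], beta=[0.0, 1.0])

print(frozen_layer([1.0, 2.0]))   # [2.0, 4.0]  (original behavior preserved)
print(steered_layer([1.0, 2.0]))  # [2.0, 3.0]  (steered activations)
```

Since the wrapper only post-processes activations, steering can be switched on or off per request by choosing which callable to invoke.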

Citation

@inproceedings{lineas,
  title = {LinEAS: End-to-end Learning of Activation Steering with a Distributional Loss},
  author = {Rodriguez, Pau and Klein, Michael and Gualdoni, Eleonora and
            Maiorca, Valentino and Blaas, Arno and Zappella, Luca and
            Cuturi, Marco and Suau, Xavier},
  booktitle = {The Thirty-ninth Annual Conference on Neural Information Processing Systems},
  year = {2025}
}

Authors

Pau Rodriguez¹ · Michael Klein¹ · Eleonora Gualdoni¹ · Valentino Maiorca² · Arno Blaas¹ · Luca Zappella¹ · Marco Cuturi¹ · Xavier Suau¹

¹Apple · ²Sapienza University of Rome