Overview

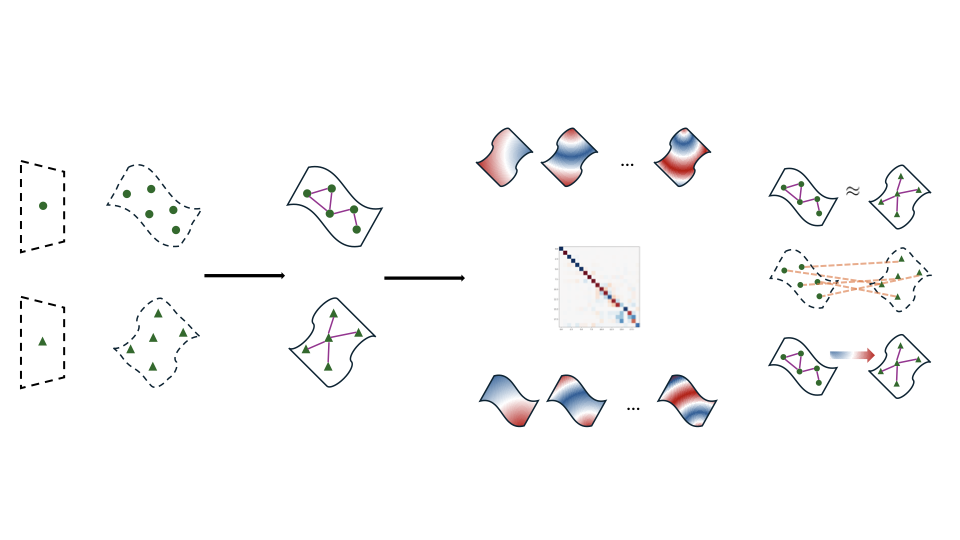

Neural networks learn data representations that lie on low-dimensional manifolds, yet modeling the relationship between these representational spaces remains an ongoing challenge. Latent Functional Maps (LFM) introduces a spectral framework that addresses this problem in the functional domain, bringing together principles from spectral geometry and representation learning.

By adapting the functional maps framework from 3D geometry processing to neural latent spaces, LFM provides a versatile tool that enables three key capabilities:

- Compare different representational spaces in an interpretable way and measure their intrinsic similarity

- Find correspondences between spaces, both in unsupervised and weakly supervised settings

- Transfer representations effectively between distinct spaces

Spectral Framework: From Geometry to Representation Learning

Building the Graph Representation

To leverage the geometry of the underlying manifold, LFM models the latent space by constructing a symmetric k-nearest neighbor (k-NN) graph. Given samples \(X = \{x_1, \ldots, x_n\}\) from a latent space \(\mathcal{X}\), we build an undirected weighted graph \(G = (X, E, \mathbf{W})\) where edges connect nearby points in the latent space.

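We then compute the normalized graph Laplacian \(\mathcal{L}_G = \mathbf{I} - \mathbf{D}^{-1/2} \mathbf{W} \mathbf{D}^{-1/2}\), where \(\mathbf{D}\) is the diagonal degree matrix with \(D_{ii} = \sum_j W_{ij}\), and its eigendecomposition \(\mathcal{L}_G = \mathbf{\Phi}_G \mathbf{\Lambda}_G \mathbf{\Phi}_G^T\). The eigenvectors \(\mathbf{\Phi}_G\) form an orthonormal basis for functions defined on the graph, providing a spectral representation of the latent space. A truncated basis made of the eigenvectors with the smallest eigenvalues is typically used.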
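The graph construction and spectral basis can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions: Euclidean distances define the neighborhoods, edges carry Gaussian weights with a median-distance bandwidth, and the helper names `knn_graph` and `laplacian_eigenbasis` are hypothetical. The original implementation may choose the metric, the weighting, and k differently.

```python
import numpy as np

def knn_graph(X: np.ndarray, k: int = 10) -> np.ndarray:
    """Symmetric k-NN adjacency W with Gaussian edge weights (assumed weighting)."""
    n = X.shape[0]
    # Squared Euclidean distances between all pairs of latent vectors.
    sq = np.sum(X ** 2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    np.fill_diagonal(d2, np.inf)               # exclude self-loops
    # Indices of the k nearest neighbors of every point.
    nn = np.argsort(d2, axis=1)[:, :k]
    rows = np.repeat(np.arange(n), k)
    cols = nn.ravel()
    # Bandwidth: median squared k-NN distance (an illustrative choice).
    sigma2 = np.median(d2[rows, cols])
    W = np.zeros((n, n))
    W[rows, cols] = np.exp(-d2[rows, cols] / sigma2)
    # Symmetrize: keep an edge if either endpoint lists the other as a neighbor.
    return np.maximum(W, W.T)

def laplacian_eigenbasis(W: np.ndarray, n_eig: int = 20):
    """Normalized Laplacian I - D^{-1/2} W D^{-1/2} and its first n_eig eigenpairs."""
    d_inv_sqrt = 1.0 / np.sqrt(W.sum(axis=1))
    L = np.eye(W.shape[0]) - d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    # eigh returns ascending eigenvalues and orthonormal eigenvectors (columns).
    evals, evecs = np.linalg.eigh(L)
    return evals[:n_eig], evecs[:, :n_eig]

# Usage on random stand-in latents.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 16))
W = knn_graph(X, k=10)
evals, Phi = laplacian_eigenbasis(W, n_eig=20)
```

A dense eigendecomposition is fine at this scale; for large sample sizes a sparse adjacency and an iterative eigensolver for the smallest eigenvalues would be the usual choice.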

Computing Latent Functional Maps

Given two latent spaces \(\mathcal{X}\) and \(\mathcal{Y}\) with their graph Laplacians and eigenbases, we compute a functional map \(\mathbf{C}\) that transforms functions between these spaces. The optimization problem is:

\[\underset{\mathbf{C}}{\mathrm{argmin}} \; \| \mathbf{C} \hat{\mathbf{F}}_{G_X} - \hat{\mathbf{F}}_{G_Y} \|_F^2 + \alpha \rho_{\mathcal{L}}(\mathbf{C}) + \beta \rho_{f}(\mathbf{C})\]

where \(\hat{\mathbf{F}}_G = \mathbf{\Phi}_{G}^T \mathbf{F}_G\) are the spectral coefficients of the descriptor functions \(\mathbf{F}_G\), and \(\rho_{\mathcal{L}}\) and \(\rho_{f}\), weighted by \(\alpha\) and \(\beta\), are regularizers enforcing commutativity with the Laplacians and with the descriptors, respectively.
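For a concrete instance of this optimization, take \(\rho_{\mathcal{L}}(\mathbf{C}) = \|\mathbf{C}\mathbf{\Lambda}_X - \mathbf{\Lambda}_Y\mathbf{C}\|_F^2\), the form commonly used in the functional maps literature, and set \(\beta = 0\), dropping the descriptor commutativity term. Because the eigenvalue matrices are diagonal, the problem then decouples into one small regularized least-squares solve per row of \(\mathbf{C}\). The sketch below assumes this simplified objective; the function name `fit_functional_map` is hypothetical, and the demo uses random orthonormal bases as stand-ins for real Laplacian eigenvectors.

```python
import numpy as np

def fit_functional_map(Phi_X, evals_X, F_X, Phi_Y, evals_Y, F_Y, alpha=1e-2):
    """Solve min_C ||C Fx - Fy||_F^2 + alpha * ||C Lx - Ly C||_F^2 (beta = 0)."""
    # Spectral coefficients of the descriptors: F_hat = Phi^T F.
    Fx = Phi_X.T @ F_X                          # (k_x, m)
    Fy = Phi_Y.T @ F_Y                          # (k_y, m)
    k_y, k_x = Phi_Y.shape[1], Phi_X.shape[1]
    C = np.zeros((k_y, k_x))
    gram = Fx @ Fx.T                            # (k_x, k_x), shared by all rows
    for i in range(k_y):
        # With diagonal Laplacians, the commutativity penalty on row i is
        # sum_j C_ij^2 (lambda_X_j - lambda_Y_i)^2, so each row is a ridge problem.
        penalty = np.diag((evals_X - evals_Y[i]) ** 2)
        C[i] = np.linalg.solve(gram + alpha * penalty, Fx @ Fy[i])
    return C

# Demo: Y is X with its points shuffled, so the true functional map is the identity.
rng = np.random.default_rng(0)
n, k, m = 100, 10, 30
Phi_X, _ = np.linalg.qr(rng.normal(size=(n, k)))    # stand-in orthonormal basis
evals = np.sort(rng.uniform(0.0, 2.0, size=k))      # stand-in eigenvalues
perm = rng.permutation(n)
Phi_Y = Phi_X[perm]                                  # same basis, reordered points
F_X = rng.normal(size=(n, m))                        # descriptor functions on X
F_Y = F_X[perm]                                      # corresponding descriptors on Y
C = fit_functional_map(Phi_X, evals, F_X, Phi_Y, evals, F_Y, alpha=1e-2)
```

Because the shuffle cancels in \(\mathbf{\Phi}_Y^T \mathbf{F}_Y\), both spectral descriptor matrices coincide and the solver returns the identity up to round-off.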

Descriptor Functions

Descriptors encode information shared between spaces \(\mathcal{X}\) and \(\mathcal{Y}\):

- Supervised: Distance functions from known anchor points (partial correspondences)

- Weakly supervised: Equivalence relations such as label assignments (many-to-many mappings)

- Unsupervised: Geometric quantities that depend only on the graph topology (e.g., Heat Kernel Signature)

This flexibility allows LFM to work with varying amounts of supervision, from zero anchors to full correspondence information.
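The three descriptor families can be illustrated with simple NumPy helpers. These choices are assumptions for illustration: anchor descriptors use Euclidean distance, label descriptors are one-hot class indicators, and the Heat Kernel Signature uses its standard spectral form \(\mathrm{HKS}(x,t) = \sum_i e^{-t\lambda_i}\phi_i(x)^2\). The function names are hypothetical. Whatever the choice, the columns of \(\mathbf{F}_{G_X}\) and \(\mathbf{F}_{G_Y}\) must correspond: anchor j in X must match anchor j in Y, class c must be the same class in both spaces, and HKS columns must use the same diffusion times.

```python
import numpy as np

def anchor_descriptors(Z, anchor_idx):
    """Supervised: distance from every point to each anchor, shape (n, n_anchors)."""
    A = Z[anchor_idx]                                        # (n_anchors, d)
    return np.linalg.norm(Z[:, None, :] - A[None, :, :], axis=-1)

def label_descriptors(labels, n_classes):
    """Weakly supervised: one indicator function per class, shape (n, n_classes)."""
    return np.eye(n_classes)[labels]

def heat_kernel_signature(evals, Phi, times):
    """Unsupervised: HKS(x, t) = sum_i exp(-t * lambda_i) * phi_i(x)^2, shape (n, T)."""
    return (Phi ** 2) @ np.exp(-np.outer(evals, times))

# Usage on one stand-in space (the same calls would be made for the other space).
rng = np.random.default_rng(0)
Z = rng.normal(size=(50, 8))                           # latent vectors
F_anchor = anchor_descriptors(Z, [0, 5, 10])           # (50, 3)
labels = rng.integers(0, 4, size=50)
F_label = label_descriptors(labels, n_classes=4)       # (50, 4)
Phi, _ = np.linalg.qr(rng.normal(size=(50, 10)))       # stand-in orthonormal eigenbasis
evals = np.sort(rng.uniform(0.0, 2.0, size=10))        # stand-in eigenvalues
F_hks = heat_kernel_signature(evals, Phi, np.array([0.0, 1.0, 10.0]))  # (50, 3)
```

Descriptors of different kinds can be stacked column-wise (e.g. with `np.hstack`) when several sources of supervision are available.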

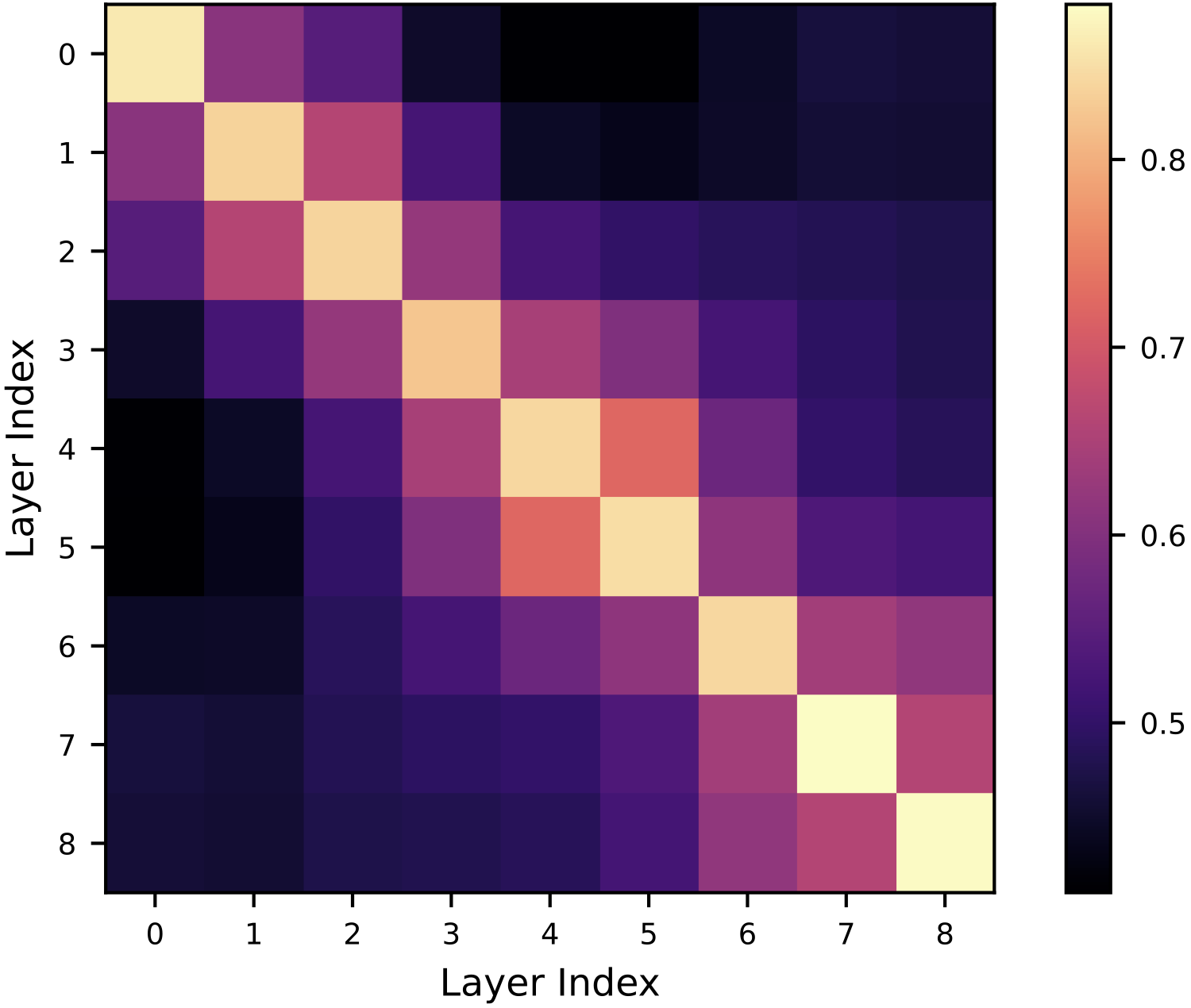

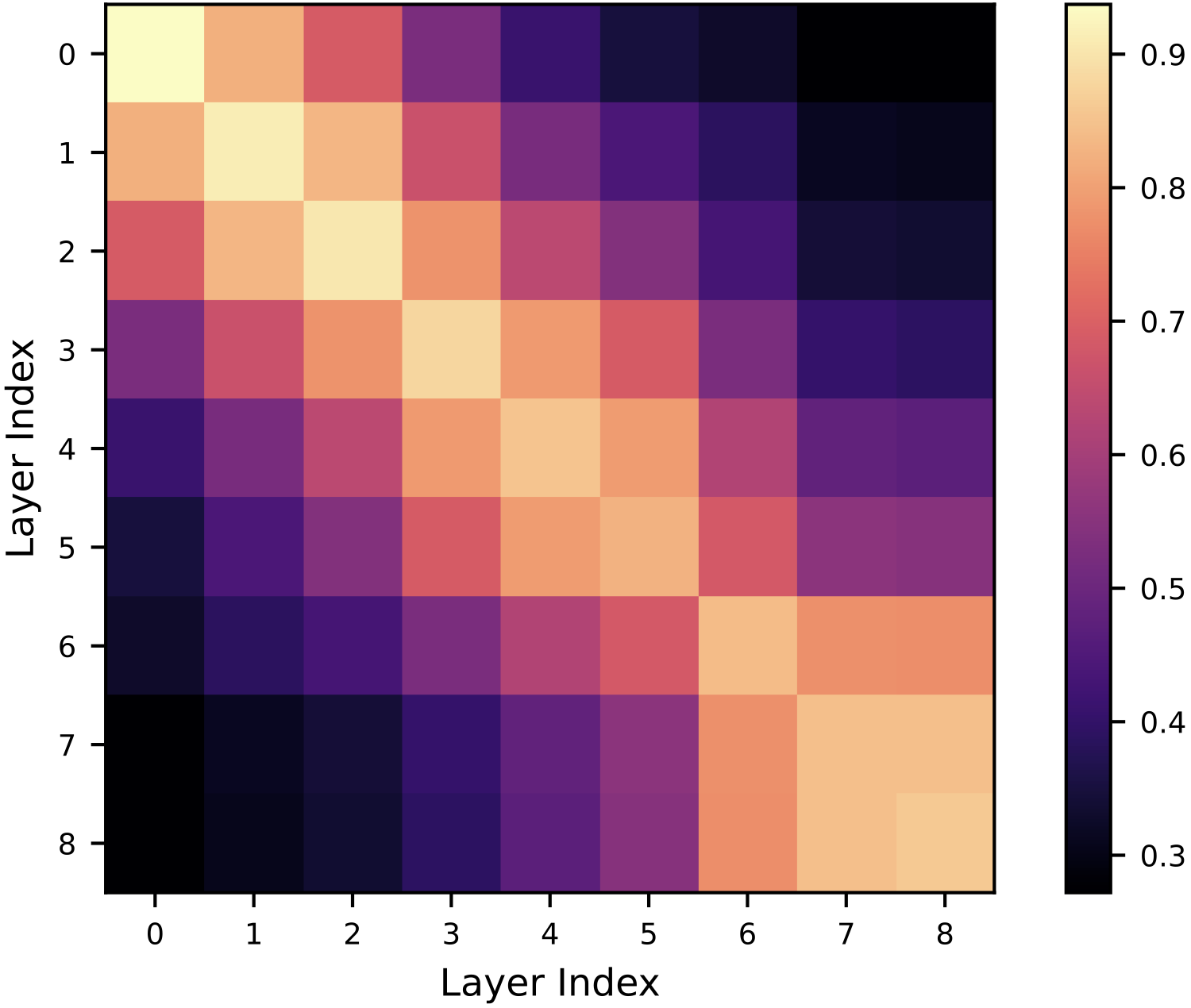

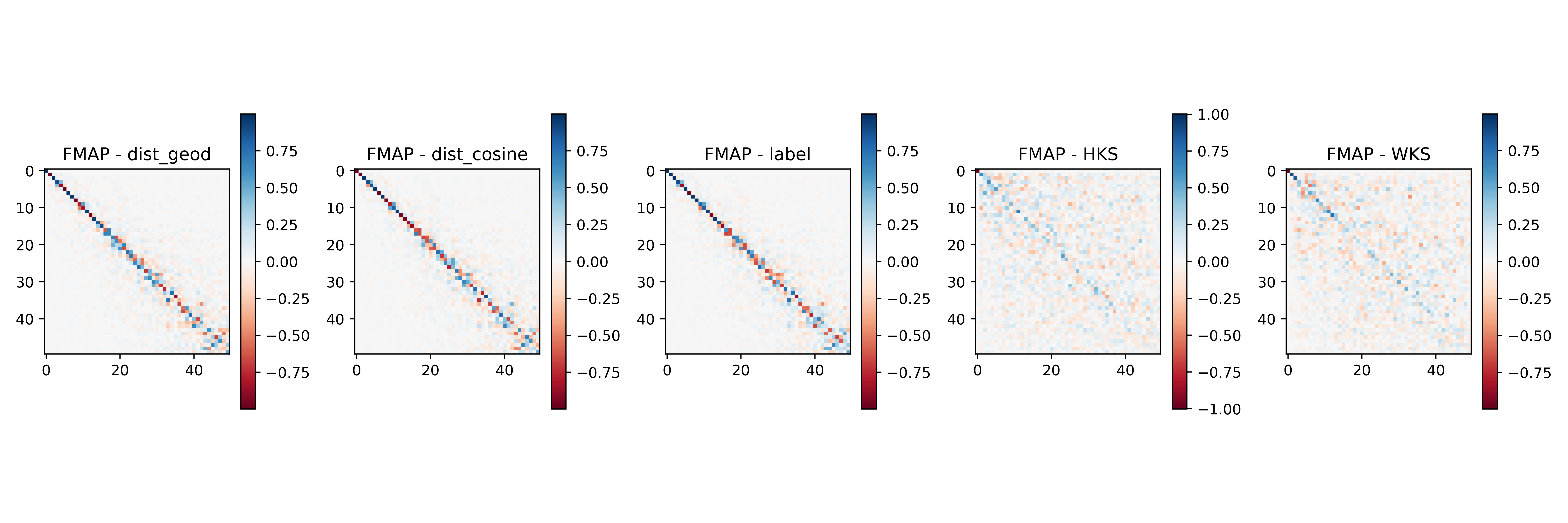

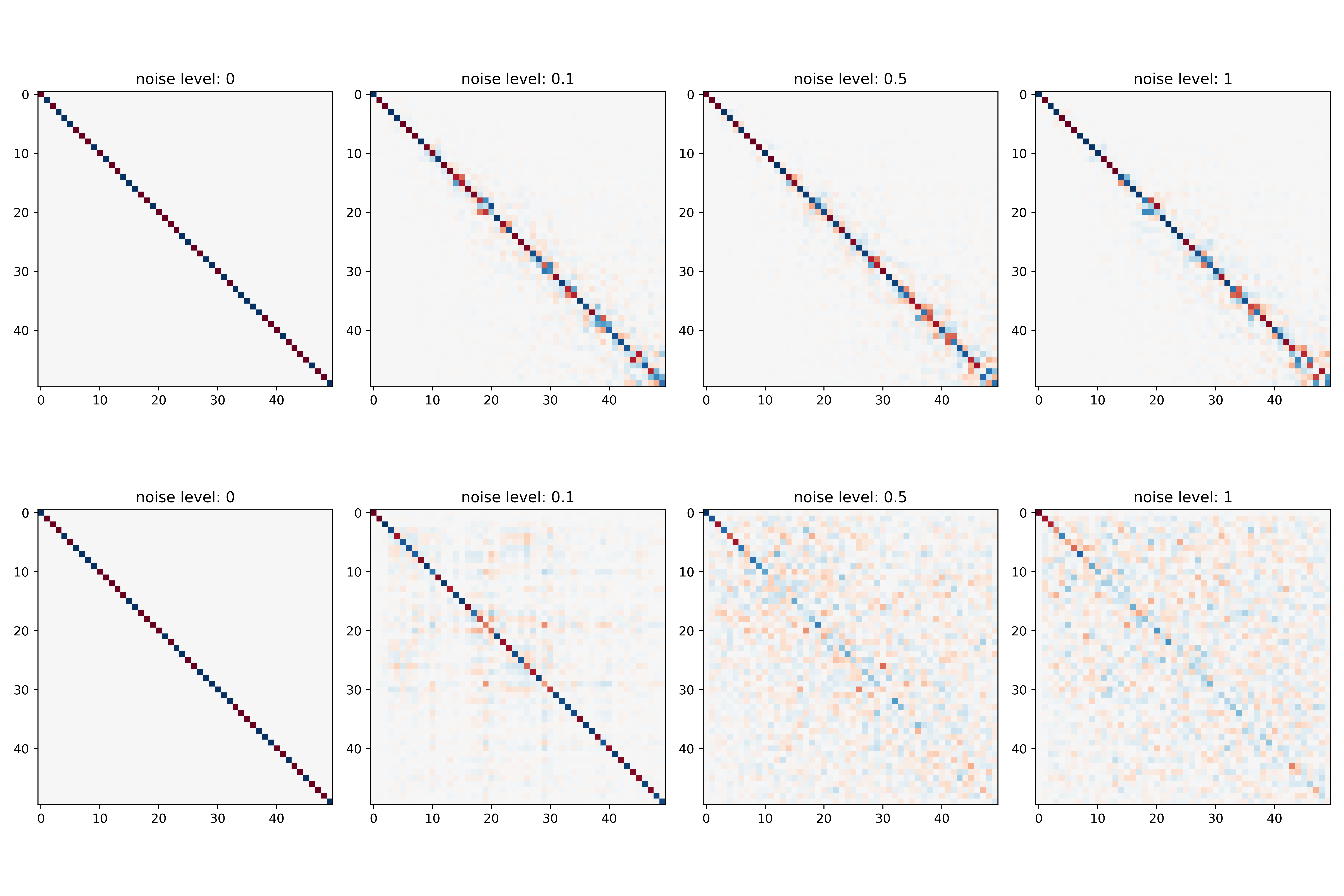

LFM as an Interpretable Similarity Measure

The functional map \(\mathbf{C}\) itself provides a meaningful similarity measure between spaces. When the two spaces are related by an isometry, the functional map is volume-preserving, which manifests as an orthogonal \(\mathbf{C}\). We therefore define similarity as:

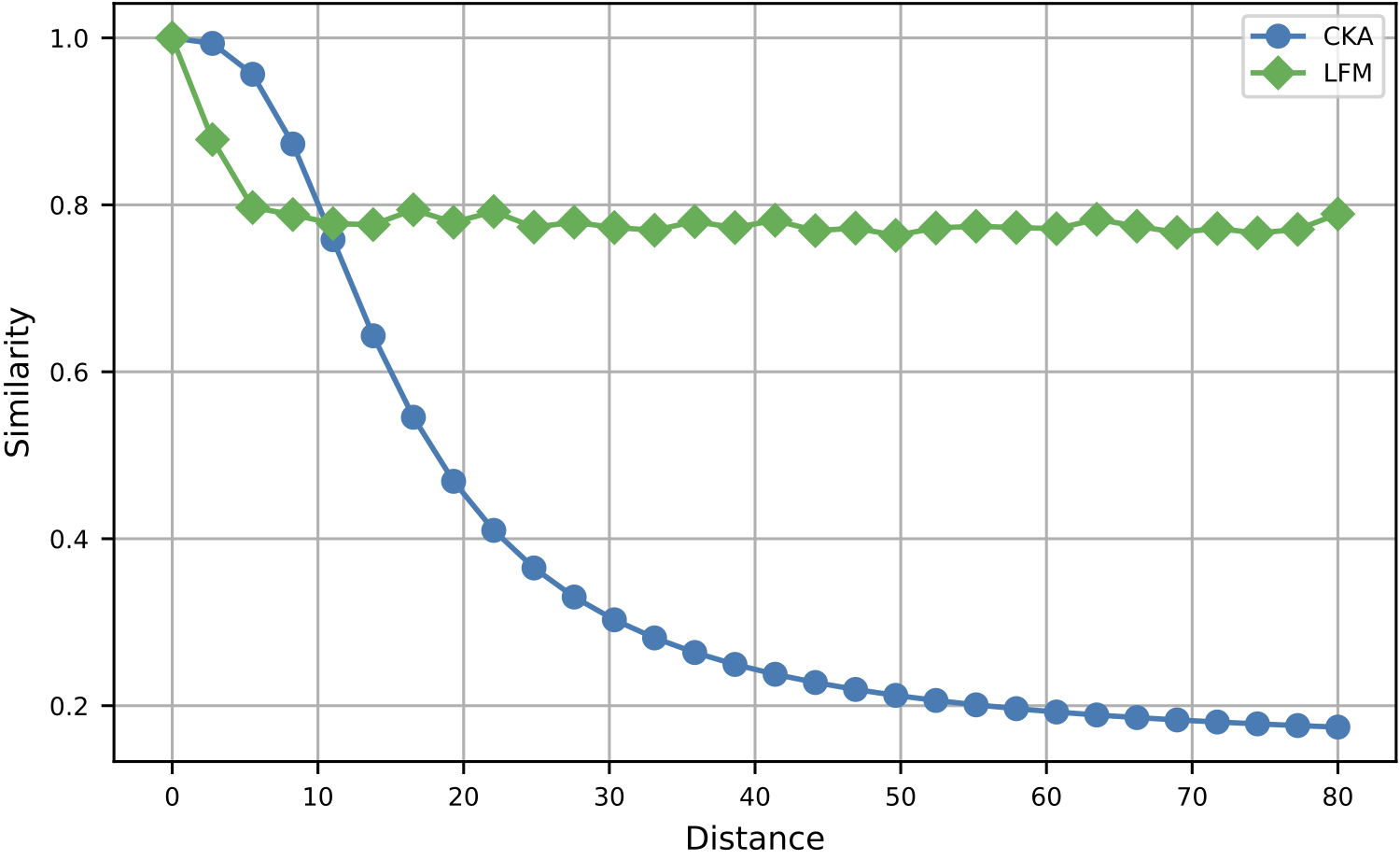

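\[\text{sim}(X,Y) = 1 - \frac{\|\text{off}(\mathbf{C}^T\mathbf{C})\|_F^2}{\|\mathbf{C}^T\mathbf{C}\|_F^2}\]

where \(\text{off}(\cdot)\) keeps only the off-diagonal entries of a matrix, so the score reaches 1 when \(\mathbf{C}^T\mathbf{C}\) is diagonal, as it is for an orthogonal \(\mathbf{C}\). This metric is not only more robust than existing methods like CKA (Centered Kernel Alignment) but also interpretable: the eigenvectors of \(\mathbf{C}^T\mathbf{C}\) localize regions of high distortion in the target manifold.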
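The score needs nothing but the functional map itself. A minimal NumPy sketch of the formula, with a hypothetical function name `lfm_similarity`:

```python
import numpy as np

def lfm_similarity(C: np.ndarray) -> float:
    """1 - ||off(C^T C)||_F^2 / ||C^T C||_F^2."""
    M = C.T @ C
    off = M - np.diag(np.diag(M))    # zero out the diagonal, keep off-diagonal entries
    return float(1.0 - np.sum(off ** 2) / np.sum(M ** 2))

rng = np.random.default_rng(0)
Q, _ = np.linalg.qr(rng.normal(size=(10, 10)))       # orthogonal map: ideal case
sim_orth = lfm_similarity(Q)                         # numerically 1
sim_rand = lfm_similarity(rng.normal(size=(10, 10))) # generic map: noticeably below 1
```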

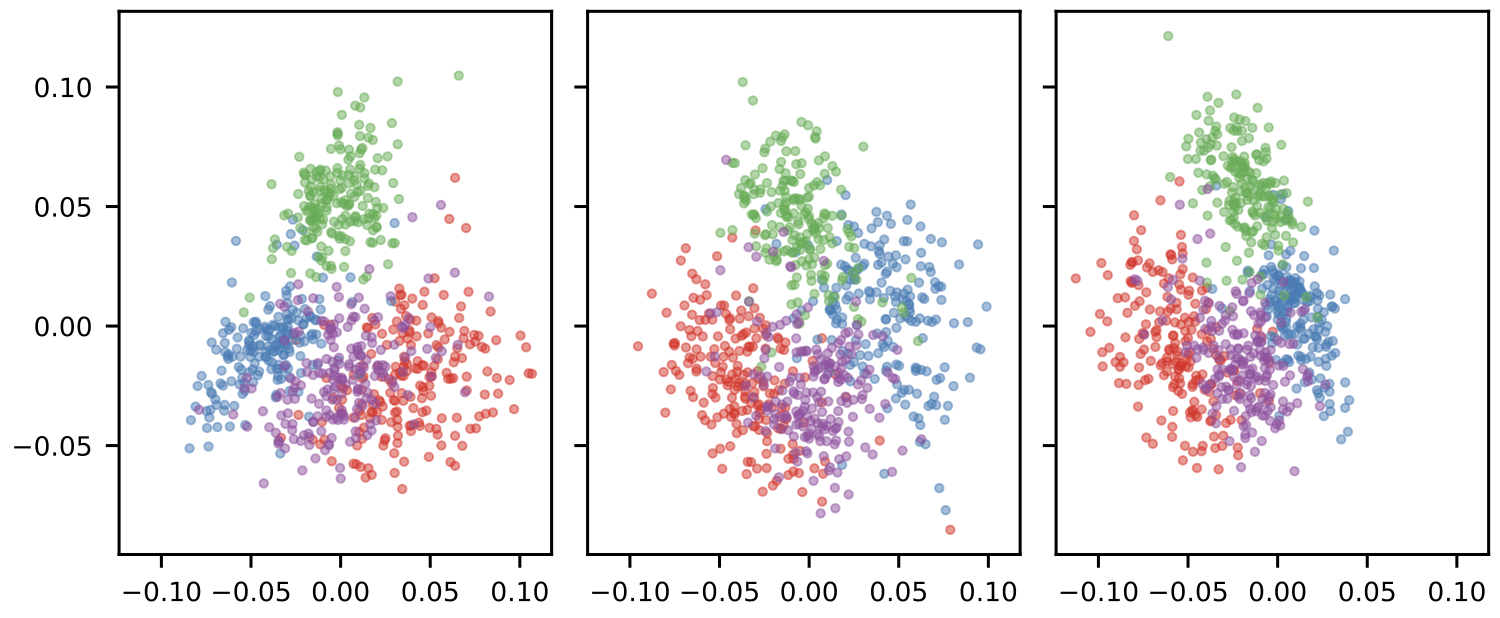

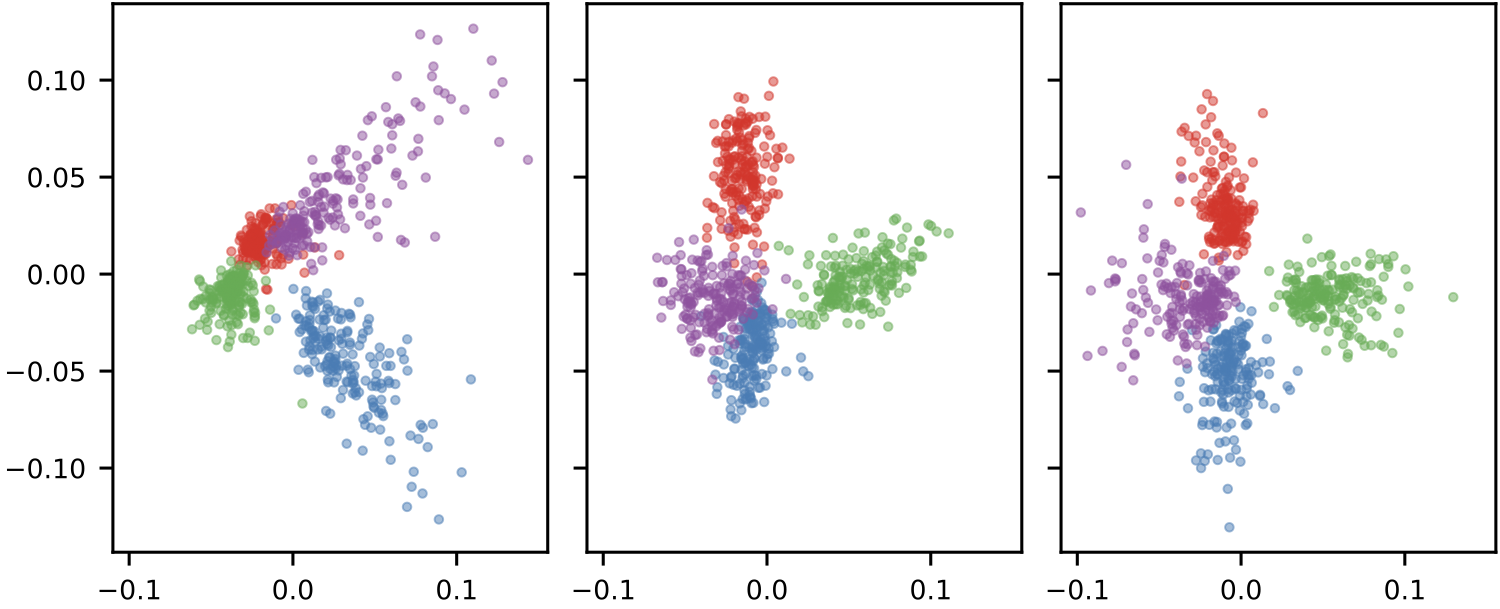

Robustness to Semantically Preserving Transformations

A critical advantage of LFM is its robustness to transformations that preserve the semantic structure of representations. When latent representations are perturbed in directions orthogonal to the decision boundary (preserving linear separability), LFM similarity scores remain high while CKA degrades significantly. This demonstrates that LFM better captures the task-relevant structure of representations.

Experiments and Results

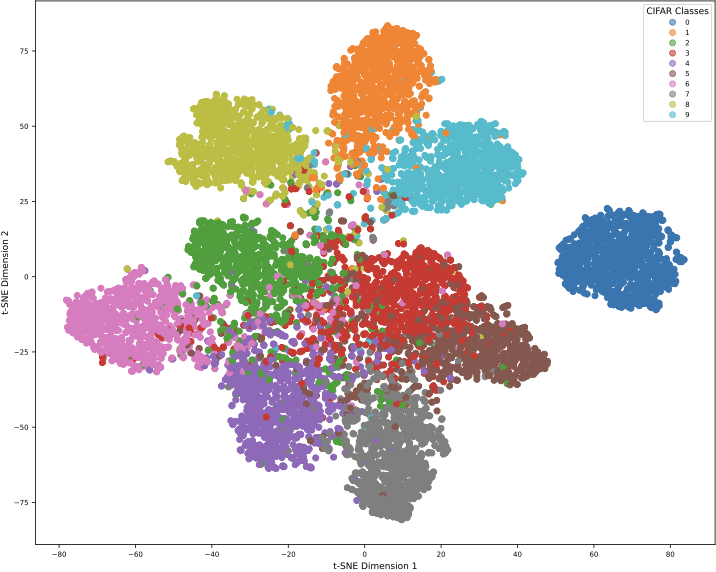

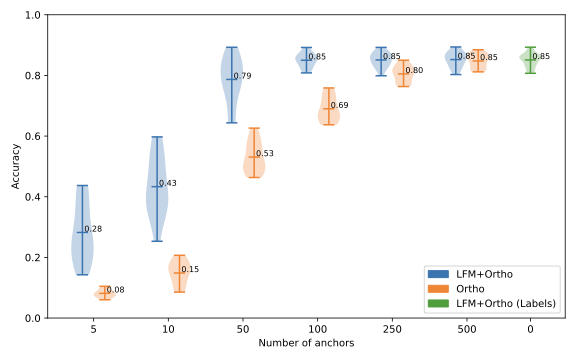

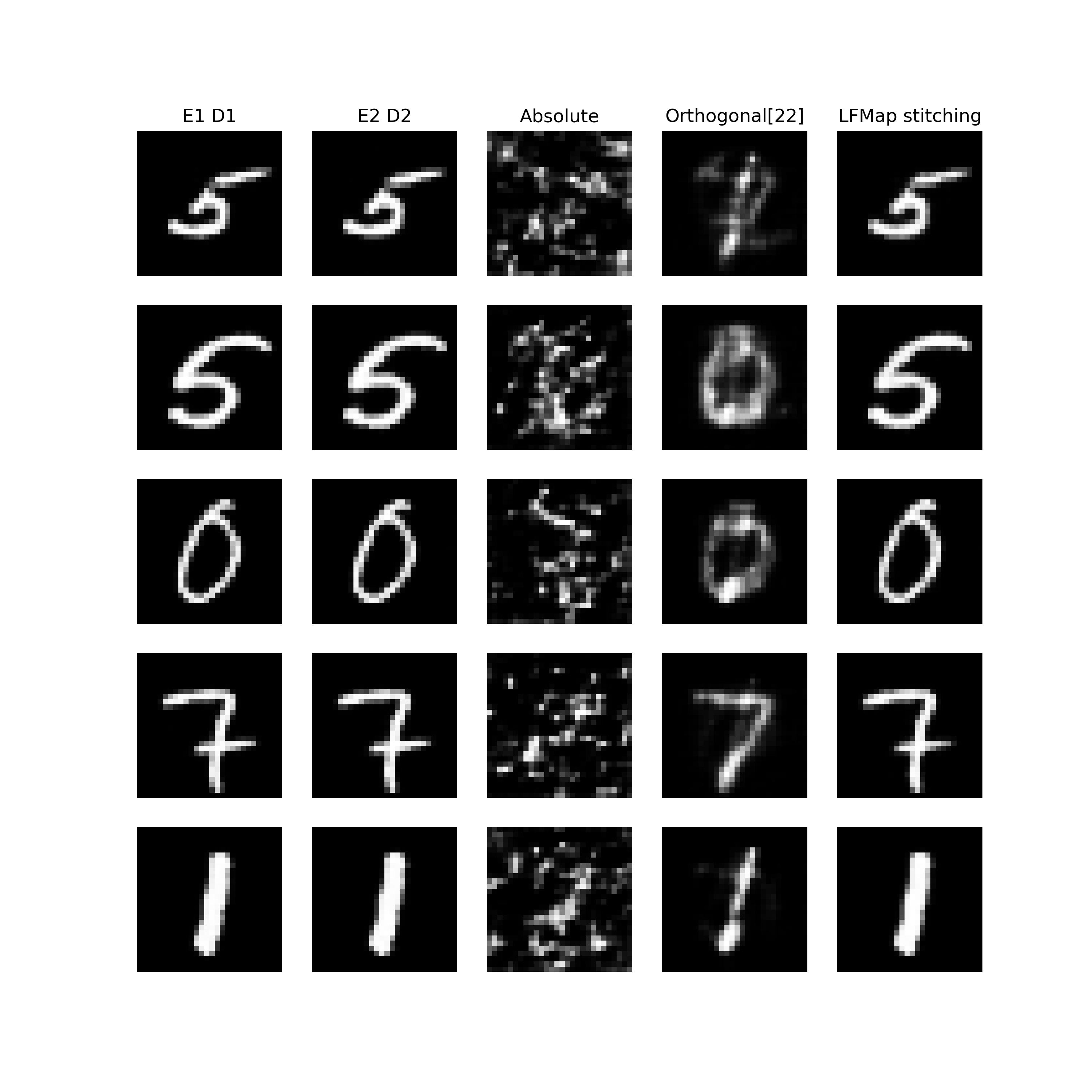

Zero-Shot Stitching

In latent communication tasks, a common challenge is combining an encoder from one model with a decoder from another without any training. LFM enables truly zero-shot stitching: no training, fine-tuning, or pre-trained stitching layers are required.

Key findings:

- With just 5-10 anchors, LFM achieves performance comparable to methods using 50+ anchors

- Using only dataset labels (no anchors), LFM achieves strong zero-shot stitching performance

- Results validated across multiple datasets: CIFAR-100, ImageNet, CUB, MNIST, and AgNews

- Works across modalities: both vision and text encoders
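Once a functional map is available, one way to stitch models is to convert \(\mathbf{C}\) into point-to-point matches and use those matches to estimate a transformation between the latent spaces. The sketch below assumes the usual functional-maps convention \(\mathbf{\Phi}_Y\mathbf{C} \approx \mathbf{\Pi}\mathbf{\Phi}_X\), with \(\mathbf{\Pi}\) the point map from Y to X, recovers matches by nearest-neighbor search, and fits an orthogonal transformation with Procrustes. The orthogonal estimator and the helper names `pointwise_map` and `procrustes` are assumptions, not necessarily what the paper uses. To stay self-contained, it builds a synthetic case whose true functional map is the identity.

```python
import numpy as np

def pointwise_map(C, Phi_X, Phi_Y):
    """For each point of Y, return the index of its matched point in X."""
    # Convention assumed: Phi_Y @ C ~ Pi @ Phi_X, with Pi the Y -> X point map.
    emb_Y = Phi_Y @ C                                        # (n_y, k_x)
    d2 = ((emb_Y[:, None, :] - Phi_X[None, :, :]) ** 2).sum(axis=-1)
    return d2.argmin(axis=1)

def procrustes(A, B):
    """Orthogonal R minimizing ||A R - B||_F, where rows of A and B correspond."""
    U, _, Vt = np.linalg.svd(A.T @ B)
    return U @ Vt

rng = np.random.default_rng(0)
n, k, d = 100, 10, 8
# Synthetic stand-ins: Y is X with shuffled points, so the functional map is I.
Phi_X, _ = np.linalg.qr(rng.normal(size=(n, k)))
perm = rng.permutation(n)
Phi_Y = Phi_X[perm]
C = np.eye(k)
Z_X = rng.normal(size=(n, d))                        # latents from encoder X
Q, _ = np.linalg.qr(rng.normal(size=(d, d)))         # unknown rotation between spaces
Z_Y = Z_X[perm] @ Q                                  # latents from encoder Y

match = pointwise_map(C, Phi_X, Phi_Y)               # Y point i <-> X point match[i]
R = procrustes(Z_X[match], Z_Y)
# Zero-shot stitching: encode with X, map with R, then feed Y's decoder (not shown).
Z_stitched = Z_X @ R
```

The brute-force nearest-neighbor search stores an n_y × n_x × k array; for larger sample sizes a KD-tree or batched search would be the usual replacement.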

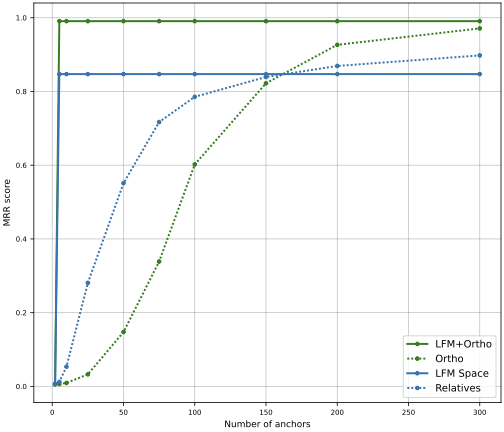

Retrieval Tasks

LFM significantly improves retrieval performance when aligning word embeddings from different models (FastText and Word2Vec).

Key findings:

- With 5 anchors, LFM achieves a mean reciprocal rank (MRR) above 0.8, while baselines remain below 0.4

- Performance plateaus at around 25-50 eigenvectors, indicating that a basis of this size is sufficient for alignment

- The functional representation provides an interpretable intermediate space for alignment
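Mean reciprocal rank scores each query by the inverse rank of its correct match and averages over all queries. A minimal sketch, assuming the source embeddings have already been mapped into the target space, that row i of both arrays is the same word, and that retrieval ranks candidates by cosine similarity (the name `mean_reciprocal_rank` is hypothetical):

```python
import numpy as np

def mean_reciprocal_rank(source: np.ndarray, target: np.ndarray) -> float:
    """MRR of retrieving target[i] for query source[i] by cosine similarity."""
    s = source / np.linalg.norm(source, axis=1, keepdims=True)
    t = target / np.linalg.norm(target, axis=1, keepdims=True)
    sims = s @ t.T                                  # (n, n) cosine similarities
    correct = np.diag(sims)                         # score of the true match
    # Rank of the true match: 1 + number of candidates scoring strictly higher.
    ranks = 1 + (sims > correct[:, None]).sum(axis=1)
    return float(np.mean(1.0 / ranks))

# Each correct match is ranked second here, so the MRR is 0.5.
mrr = mean_reciprocal_rank(np.eye(2), np.array([[0.0, 1.0], [1.0, 0.0]]))
```

Ties are resolved in favor of the correct match in this sketch; stricter evaluations may count tied candidates against it.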

Visualizing Functional Maps

Functional Space Visualization

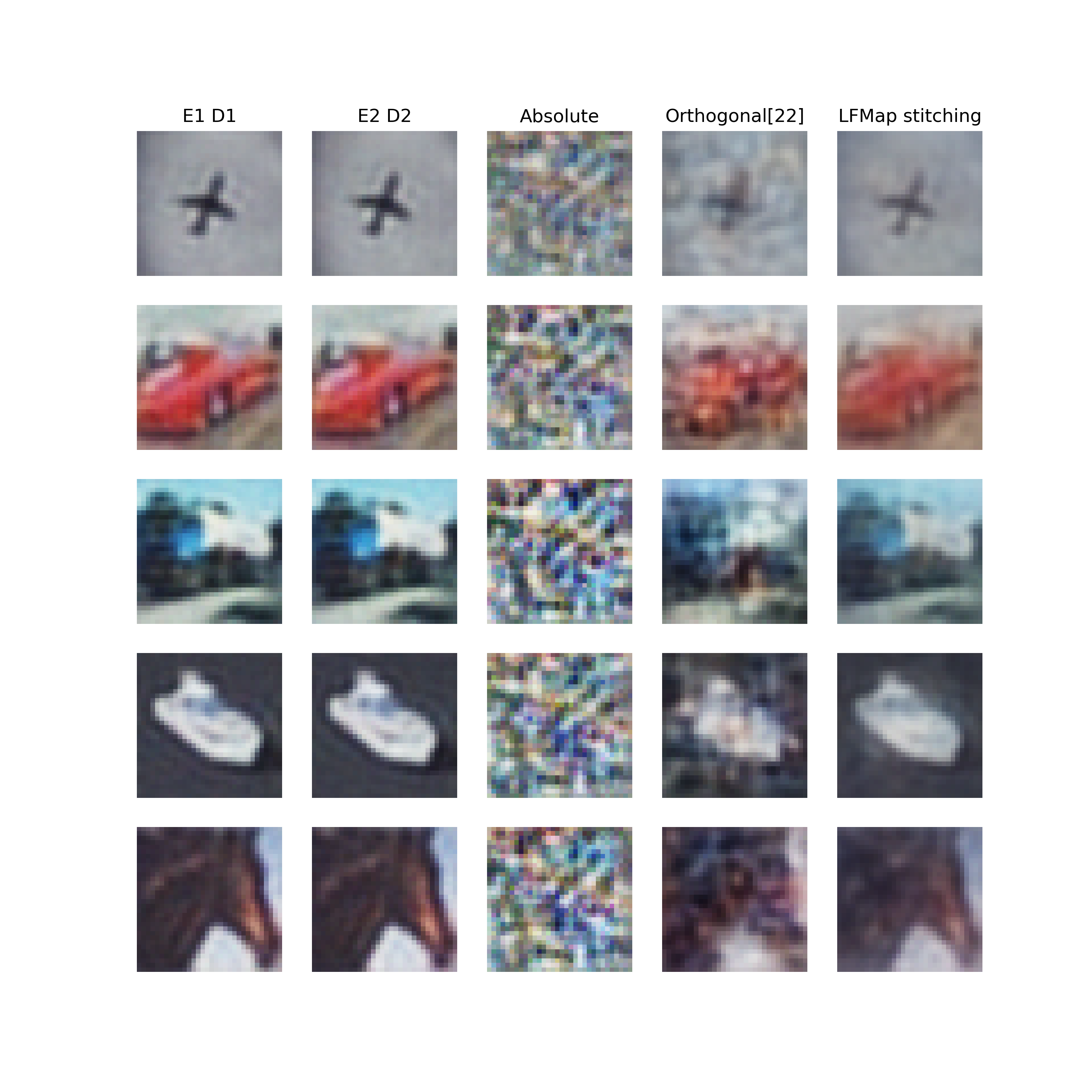

Autoencoder Stitching Applications

LFM enables cross-model autoencoder stitching, where an encoder from one model can be combined with a decoder from another to reconstruct inputs. This demonstrates the framework’s ability to align representational spaces across different architectures.

Key Findings

- Spectral methods are effective for latent alignment: By working in the functional domain rather than the point domain, LFM simplifies complex alignment problems while enhancing interpretability.

- Sample efficiency: LFM requires far fewer correspondences than traditional methods (5-10 anchors, versus 50+ for comparable performance) to achieve strong alignment.

- Multi-purpose framework: Unlike previous methods that address only one aspect of alignment, LFM provides a unified framework for similarity measurement, correspondence finding, and representation transfer.

- Interpretability: The functional map structure reveals the spectral relationship between spaces, localizes distortions, and provides insights into which frequencies capture task-relevant information.

- Robustness: LFM is stable under transformations that preserve the semantic structure of representations, making it more reliable than kernel-based methods like CKA.

- Modality-agnostic: Validated across vision (CNNs, ViTs, DINO) and language (word embeddings) domains, demonstrating broad applicability.

Citation

@inproceedings{fumero2024latent,

title = {Latent Functional Maps: a spectral framework for representation alignment},

author = {Fumero*, Marco and Pegoraro*, Marco and Maiorca, Valentino and Locatello, Francesco and Rodol{\`a}, Emanuele},

booktitle = {Advances in Neural Information Processing Systems},

year = {2024}

}

Authors

Marco Fumero¹* · Marco Pegoraro²* · Valentino Maiorca² · Francesco Locatello¹ · Emanuele Rodolà²

¹Institute of Science and Technology Austria · ²Sapienza University of Rome

*Equal contribution