Head Pursuit

Probing and controlling attention specialization in multimodal transformers

⭐ NeurIPS 2025 Spotlight Mechanistic Interpretability Workshop @ NeurIPS 2025

Overview

Large language and vision-language models exhibit remarkable capabilities, yet their internal mechanisms remain opaque. Head Pursuit reveals that individual attention heads in these models specialize in specific semantic or visual attributes—and that this specialization can be exploited for targeted model control.

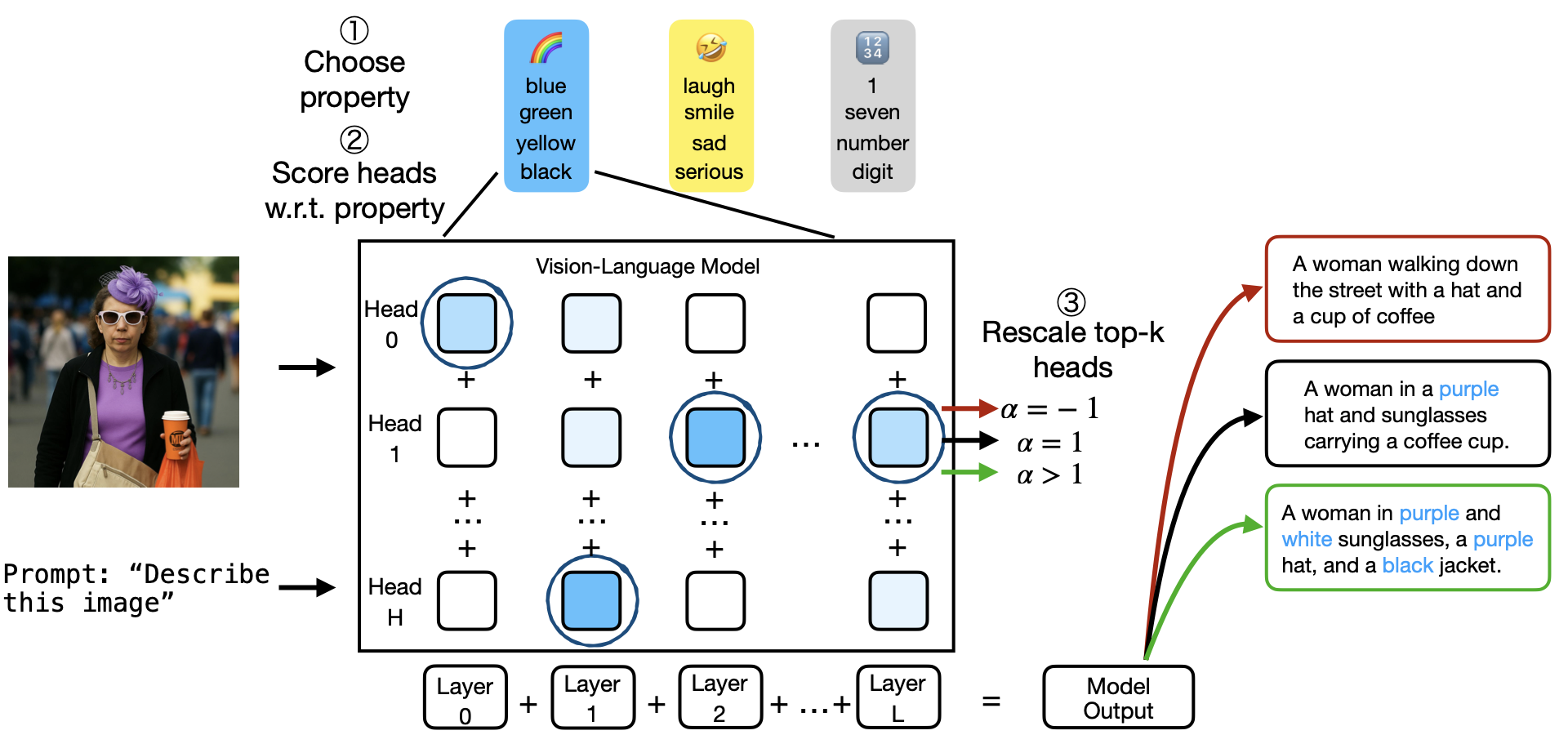

By reinterpreting common interpretability techniques through the lens of sparse signal recovery, we identify attention heads responsible for generating content in narrow conceptual domains such as colors, numbers, or sentiment. Editing as few as 1% of the model’s heads reliably suppresses or enhances targeted concepts in the output, without any additional training.

Method: From Signal Processing to Interpretability

Sparse Recovery Meets the Logit Lens

Our approach builds on a simple observation: the logit lens, a popular interpretability tool, is equivalent to performing a single step of Matching Pursuit on a single example. We generalize this by applying Simultaneous Orthogonal Matching Pursuit (SOMP), a classical sparse coding algorithm, across multiple samples simultaneously.
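The correspondence between the logit lens and a single Matching Pursuit step can be illustrated with a minimal NumPy sketch. The function names `logit_lens_top_token` and `matching_pursuit_step` are hypothetical, and the sketch assumes the rows of the dictionary are normalized to unit length and that the top logit is positive and dominant in magnitude; under those assumptions both procedures pick the same token direction.

```python
import numpy as np

def logit_lens_top_token(h, D):
    """Logit lens: project a head output h (d,) through D (v, d), return the top token index."""
    return int(np.argmax(D @ h))

def matching_pursuit_step(h, D):
    """One Matching Pursuit step on a single example.

    Picks the unit-normalized atom with the largest absolute correlation
    with h, then removes its contribution from h.
    """
    Dn = D / np.linalg.norm(D, axis=1, keepdims=True)  # unit-norm atoms
    corr = Dn @ h                                      # correlation with every atom
    j = int(np.argmax(np.abs(corr)))                   # best-matching atom
    residual = h - corr[j] * Dn[j]                     # what this atom does not explain
    return j, corr[j], residual

# Toy example: 4 "tokens" in a 4-dimensional space (illustrative values only).
D = np.eye(4)
h = np.array([0.1, 2.0, -0.5, 0.3])
top_token = logit_lens_top_token(h, D)
atom, coef, residual = matching_pursuit_step(h, D)
```

Matching Pursuit goes one step further than the logit lens by keeping the residual, which is what allows the procedure to be iterated.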

Given attention head activations \(\mathbf{H} \in \mathbb{R}^{n \times d}\) from \(n\) samples, we decompose them using the model’s unembedding matrix \(\mathbf{D} \in \mathbb{R}^{v \times d}\) as a dictionary:

\[\mathbf{H} \approx \mathbf{W}^* \mathbf{D}\]

where \(\mathbf{W}^* \in \mathbb{R}^{n \times v}\) is a sparse coefficient matrix. At each iteration, SOMP selects the dictionary atom (token direction) that maximally correlates with the residual across all samples, constructing a sparse, interpretable representation of each head’s behavior.
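The following is a minimal NumPy sketch of SOMP, not the authors' implementation. It assumes atoms are normalized before computing correlations and that per-sample correlations are aggregated by summing absolute values; other aggregation norms are possible. The function name `somp` and the parameter `n_atoms` are illustrative.

```python
import numpy as np

def somp(H, D, n_atoms):
    """Greedy SOMP sketch.

    H: (n, d) head activations, D: (v, d) dictionary (one atom per row).
    Returns the selected atom indices and a coefficient matrix W of shape
    (n, v), nonzero only in the selected columns, such that H ~ W @ D.
    """
    norms = np.linalg.norm(D, axis=1)
    Dn = D / norms[:, None]              # unit-norm atoms (assumption of this sketch)
    residual = H.copy()
    selected = []
    coef = np.zeros((0, H.shape[0]))
    for _ in range(n_atoms):
        # Aggregate each atom's absolute correlation with the residual over all samples.
        scores = np.abs(Dn @ residual.T).sum(axis=1)
        if selected:
            scores[selected] = -np.inf   # never pick the same atom twice
        selected.append(int(np.argmax(scores)))
        # Orthogonal step: refit all selected atoms jointly by least squares.
        A = Dn[selected]                 # (k, d)
        coef, *_ = np.linalg.lstsq(A.T, H.T, rcond=None)  # (k, n)
        residual = H - coef.T @ A
    W = np.zeros((H.shape[0], D.shape[0]))
    W[:, selected] = coef.T / norms[selected]  # undo the atom normalization
    return selected, W
```

The joint least-squares refit is what distinguishes the orthogonal variant from plain Matching Pursuit: after each selection the residual is orthogonal to every atom chosen so far.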

Identifying Specialized Heads

To find heads specialized in a target concept (e.g., colors, countries, toxic language), we:

- Restrict the dictionary to tokens related to the target concept

- Apply SOMP to reconstruct head activations using only these concept-specific directions

- Rank heads by the fraction of variance explained by the reconstruction

This provides a principled, data-driven method for identifying which heads are most responsible for generating specific semantic content.
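The three steps above can be sketched as follows. This is an illustration under stated assumptions rather than the released code: `head_acts` is a hypothetical dictionary mapping a head identifier to its activation matrix, `concept_token_ids` is a hypothetical list of vocabulary indices for the target concept, and "variance explained" is computed here as an uncentered energy ratio.

```python
import numpy as np

def explained_variance(H, atoms, n_atoms):
    """Fraction of the energy of H (n, d) captured by a greedy SOMP fit
    that uses at most n_atoms rows of `atoms` (k, d)."""
    A = atoms / np.linalg.norm(atoms, axis=1, keepdims=True)
    residual, chosen = H.copy(), []
    for _ in range(min(n_atoms, len(A))):
        scores = np.abs(A @ residual.T).sum(axis=1)
        if chosen:
            scores[chosen] = -np.inf
        chosen.append(int(np.argmax(scores)))
        # Refit all chosen atoms jointly, then update the residual.
        coef, *_ = np.linalg.lstsq(A[chosen].T, H.T, rcond=None)
        residual = H - coef.T @ A[chosen]
    return 1.0 - (residual ** 2).sum() / (H ** 2).sum()

def rank_heads(head_acts, D, concept_token_ids, n_atoms=5):
    """Rank heads by how well concept-specific token directions reconstruct them.

    head_acts: dict mapping a head id, e.g. (layer, head), to an (n, d) array.
    D: (v, d) unembedding matrix; concept_token_ids: indices of concept tokens.
    """
    concept_atoms = D[concept_token_ids]          # step 1: restrict the dictionary
    scores = {head: explained_variance(H, concept_atoms, n_atoms)  # step 2: SOMP fit
              for head, H in head_acts.items()}
    ranking = sorted(scores, key=scores.get, reverse=True)         # step 3: rank
    return ranking, scores
```

Heads at the top of `ranking` are the candidates for intervention; the number of heads to keep is a separate choice.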

Language Tasks

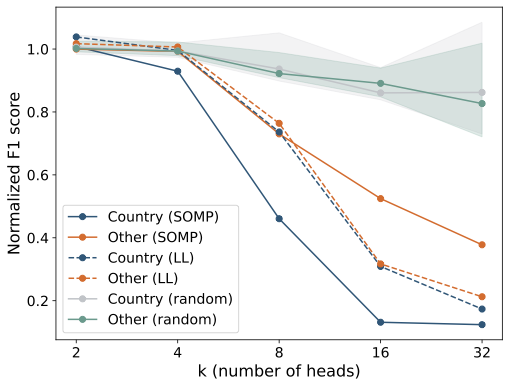

Question Answering on TriviaQA

We evaluate Mistral-7B on TriviaQA, targeting questions whose answers are country names. Intervening on just 8 heads (0.8% of the total) causes targeted performance degradation on country-related questions, while minimally affecting other questions.

Toxicity Mitigation

In a more realistic scenario without complete keyword lists, we identify “toxic heads” using only a partial vocabulary extracted from model responses. Inverting 32 heads reduces toxic content by 34% on RealToxicityPrompts and 51% on TET (measured by a RoBERTa-based toxicity classifier), while random interventions leave toxicity unchanged or increase it.

Vision-Language Tasks

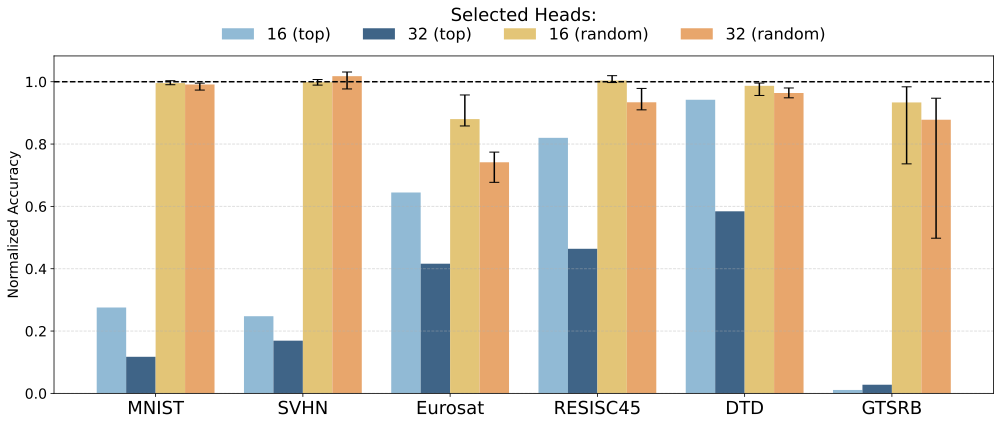

Image Classification

We test LLaVA-NeXT-7B across diverse classification datasets (MNIST, SVHN, EuroSAT, RESISC45, DTD, GTSRB). Inverting 32 heads selected by our method causes substantial accuracy drops, while random interventions at equivalent layers have minimal effect.

Moreover, head selections exhibit semantic structure: datasets with related content (MNIST/SVHN for digits, EuroSAT/RESISC45 for remote sensing) share more specialized heads, and cross-dataset interventions reveal this structure.

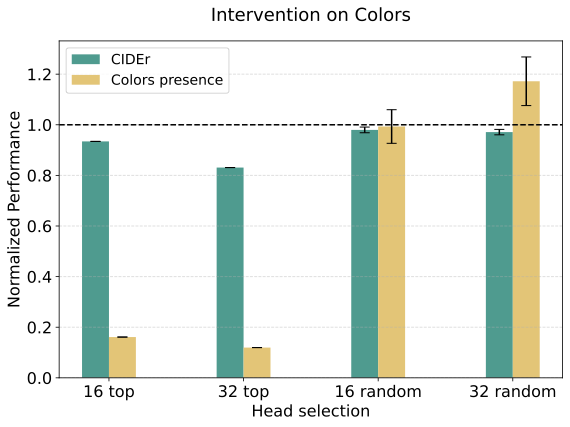

Image Captioning

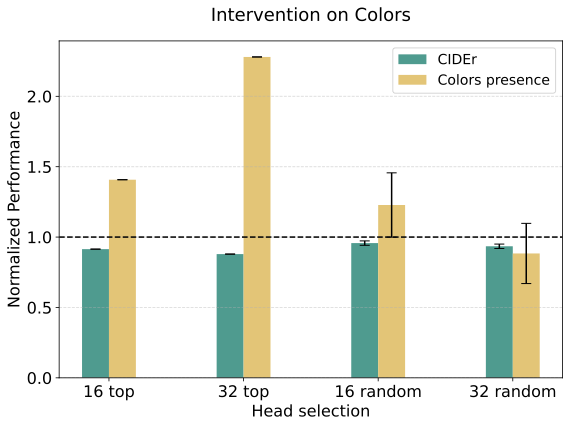

On Flickr30k, we demonstrate bidirectional control over semantic attributes:

- Inhibitory intervention (\(\alpha = -1\)): Almost completely removes color or sentiment words from captions while maintaining overall caption quality (CIDEr score).

- Enhancing intervention (\(\alpha = 5\)): More than doubles the frequency of color or sentiment words with only marginal quality degradation.
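One plausible reading of the two settings above is that the contribution of each selected head is multiplied by \(\alpha\) before the heads are summed into the residual stream. The sketch below illustrates that reading only; the function `intervene_on_heads` and the layout of `head_outputs` are assumptions, not the authors' code.

```python
import numpy as np

def intervene_on_heads(head_outputs, target_heads, alpha):
    """Rescale selected heads before summing their contributions.

    head_outputs: (n_heads, d_model) per-head contributions at one layer
                  and one token position (assumed layout).
    target_heads: indices of the heads to edit.
    alpha: 1 leaves the layer unchanged, -1 inverts the selected heads,
           values above 1 amplify them.
    """
    scale = np.ones(head_outputs.shape[0])
    scale[list(target_heads)] = alpha
    return (scale[:, None] * head_outputs).sum(axis=0)

# Toy example with 3 heads and a 4-dimensional residual stream.
outputs = np.arange(12, dtype=float).reshape(3, 4)
unchanged = intervene_on_heads(outputs, [1], alpha=1.0)
inhibited = intervene_on_heads(outputs, [1], alpha=-1.0)
enhanced = intervene_on_heads(outputs, [1], alpha=5.0)
```

Because only a scalar per head changes, an intervention of this form needs no gradient updates, which is consistent with the training-free setting described here.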

Key Findings

- Head specialization is pervasive: Across language and vision-language models, individual attention heads consistently specialize in narrow semantic domains.

- Specialization enables control: Intervening on a tiny fraction of heads (≈1%) produces targeted, interpretable changes in model behavior.

- Semantic structure emerges naturally: Head selections reflect semantic relationships between tasks and concepts.

- The method is practical: No training required, works with partial concept vocabularies, and scales to large multimodal models.

Citation

@inproceedings{headpursuit,
  title = {Head Pursuit: Probing Attention Specialization in Multimodal Transformers},
  author = {Basile, Lorenzo and Maiorca, Valentino and Doimo, Diego and Locatello, Francesco and Cazzaniga, Alberto},
  booktitle = {The Thirty-ninth Annual Conference on Neural Information Processing Systems},
  year = {2025}
}

Authors

Lorenzo Basile¹ · Valentino Maiorca²,³ · Diego Doimo¹ · Francesco Locatello³ · Alberto Cazzaniga¹

¹Area Science Park, Italy · ²Sapienza University of Rome, Italy · ³Institute of Science and Technology Austria