ASIF

Coupled Data Turns Unimodal Models to Multimodal Without Training

NeurIPS 2023

Overview

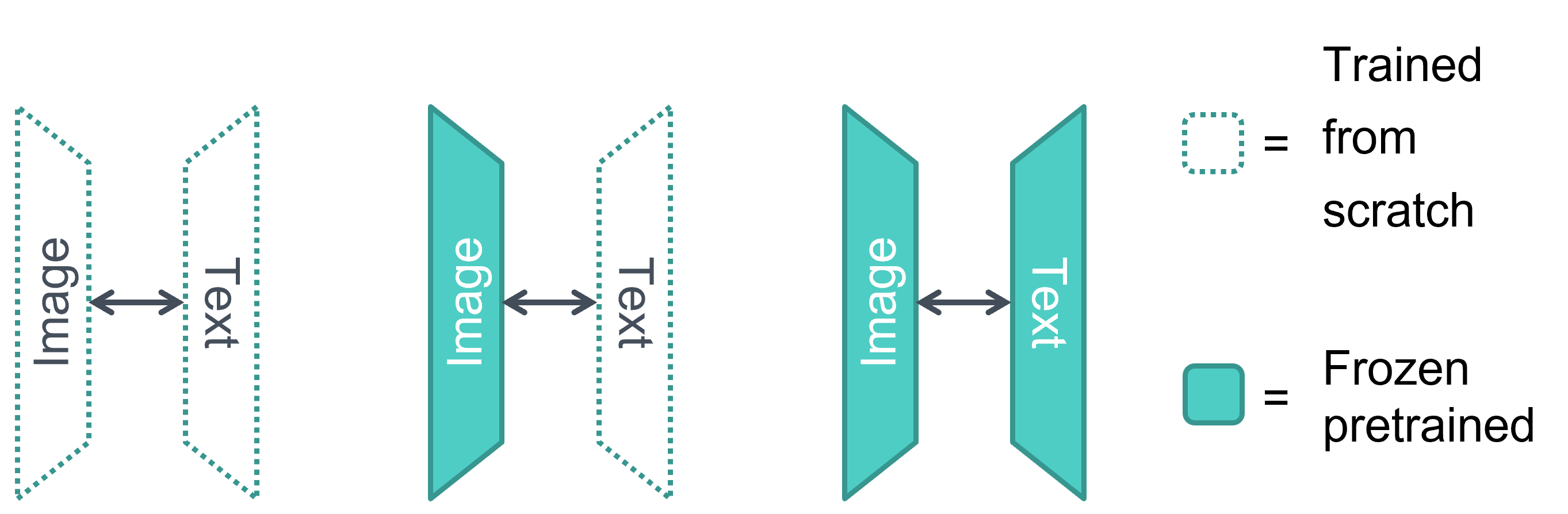

Multimodal models like CLIP have revolutionized computer vision by aligning visual and language spaces, but they require training massive encoders from scratch on hundreds of millions of image-text pairs. ASIF demonstrates a radical alternative: create a common multimodal space without any training at all, using pre-trained unimodal encoders and a much smaller collection of image-text pairs.

The key insight is surprisingly simple: captions of similar images are themselves similar. By representing inputs through their similarities to a collection of ground-truth multimodal pairs—what we call relative representations—we can create a quasi mode-invariant space where images and their captions naturally align.

Method: Relative Representations

The Core Idea

Rather than training encoders to produce aligned embeddings, ASIF uses a collection of image-text pairs as a “Rosetta stone” between modalities. Each new input is represented as its similarities to the same-modality anchors in this collection.

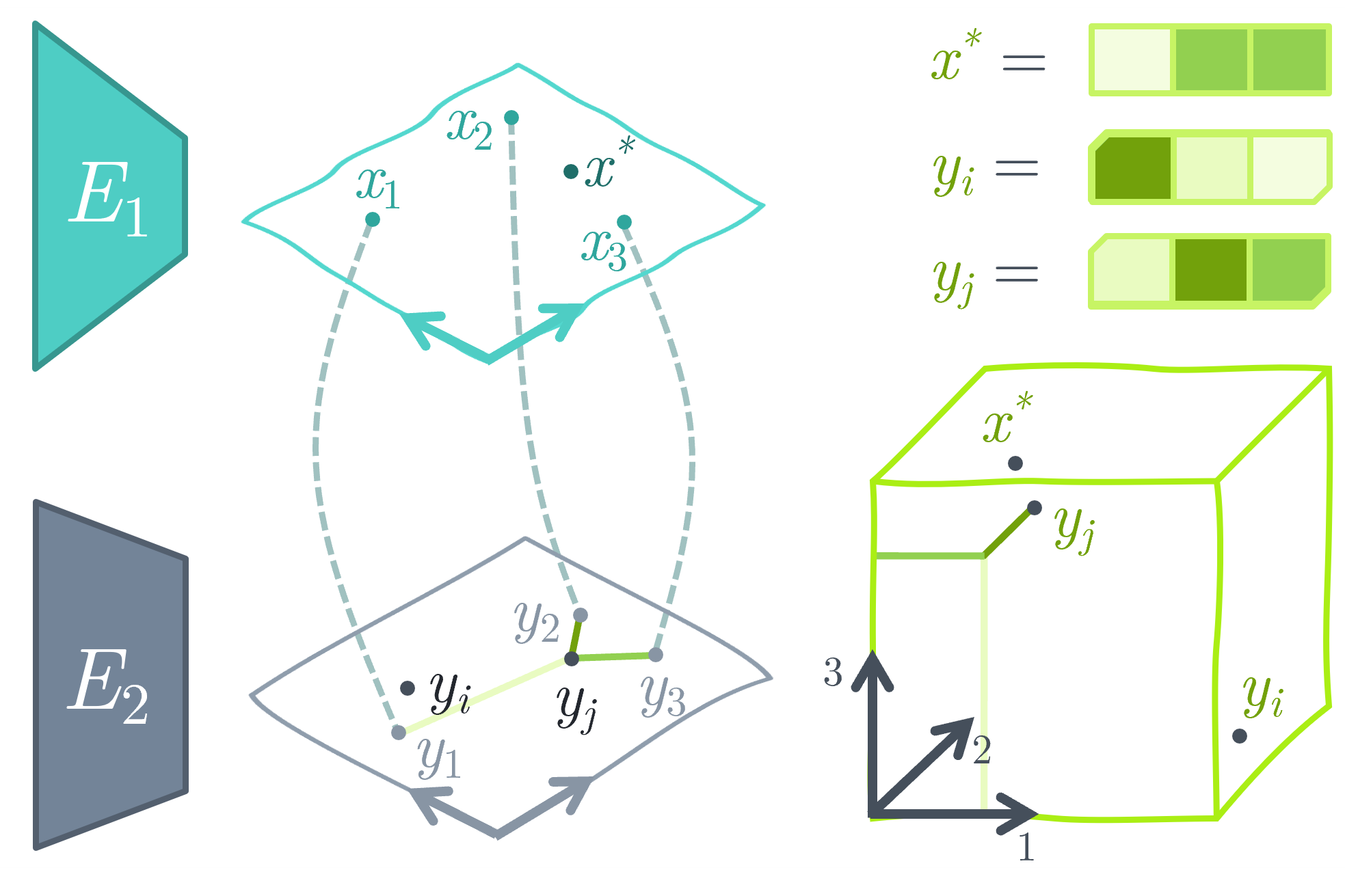

Relative Representation Definition

Given an encoder \(E: X \to \mathbb{R}^d\) and a set of anchor samples \(\{x_1, \dots, x_n\}\), the relative representation of a new sample \(x'\) is the \(n\)-dimensional vector:

\[x' = [\text{sim}(x', x_1), \dots, \text{sim}(x', x_n)]\]

where \(\text{sim}\) is a similarity function (e.g., cosine similarity) applied to the encoder outputs \(E(x')\) and \(E(x_i)\).

The crucial insight: When each anchor exists in two modalities (image and text), relative representations from both modalities live in the same \(n\)-dimensional space, enabling direct comparison between images and captions.
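As a minimal sketch of the definition above, the following NumPy snippet computes a relative representation with cosine similarity. The function name `relative_representation`, the random stand-in embeddings, and the dimensions are assumptions made for illustration; in practice the embeddings would come from the frozen pre-trained encoders.

```python
import numpy as np

def relative_representation(query_emb, anchor_embs):
    """Cosine similarity between one embedding and each of the n anchor embeddings."""
    q = query_emb / np.linalg.norm(query_emb)
    a = anchor_embs / np.linalg.norm(anchor_embs, axis=1, keepdims=True)
    return a @ q  # shape (n,)

# Random stand-ins for encoder outputs (hypothetical sizes).
rng = np.random.default_rng(0)
n, d_img, d_txt = 5, 8, 6
image_anchors = rng.normal(size=(n, d_img))  # image side of the n coupled pairs
text_anchors = rng.normal(size=(n, d_txt))   # text side of the same n pairs

img_rel = relative_representation(rng.normal(size=d_img), image_anchors)
txt_rel = relative_representation(rng.normal(size=d_txt), text_anchors)

# Both vectors have n entries even though the encoders differ in dimension.
print(img_rel.shape, txt_rel.shape)
```

The two encoders may have different output dimensions; only the number of anchors has to match for the resulting vectors to be comparable.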

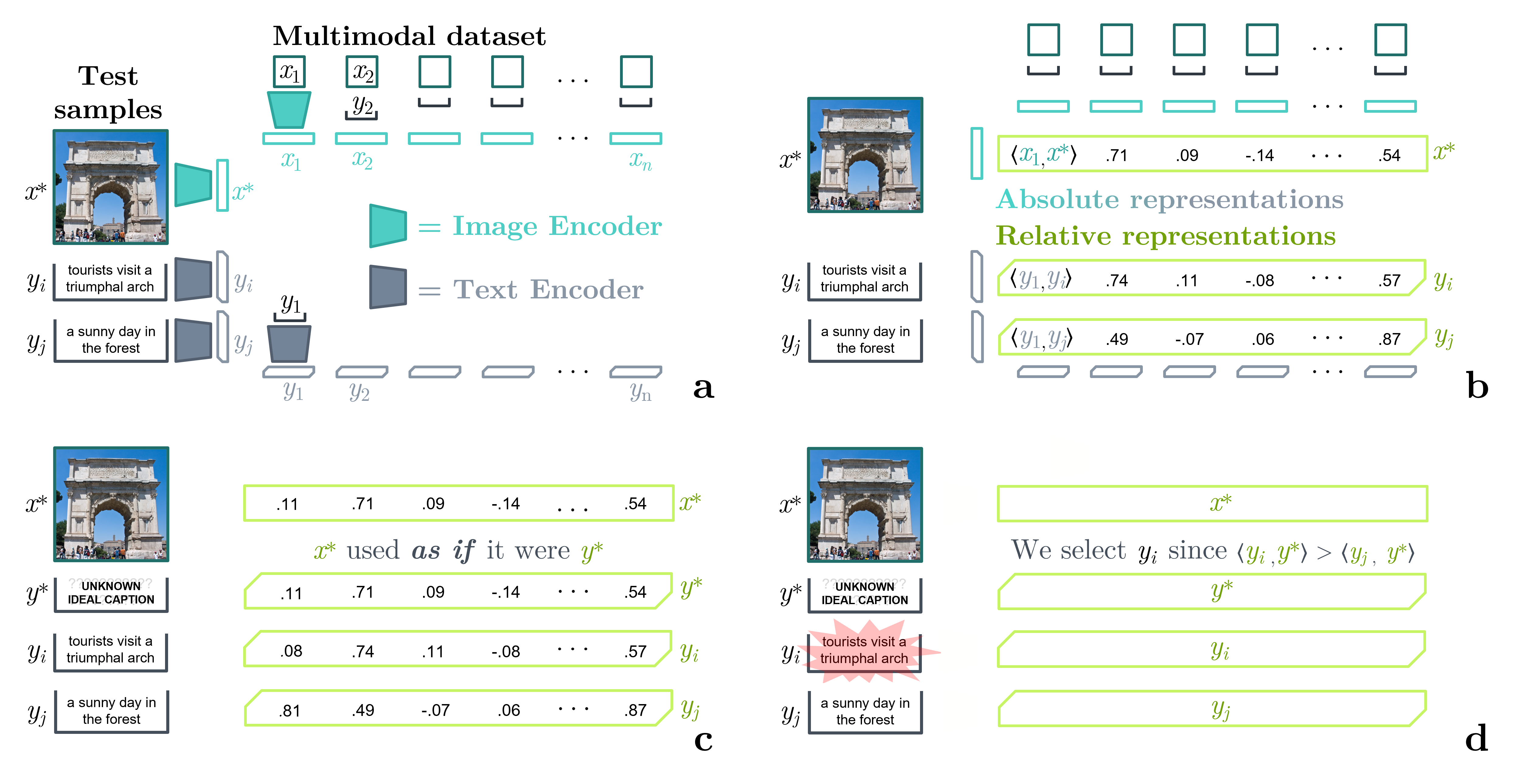

Zero-Shot Classification with ASIF

The ASIF procedure follows these steps:

- Encode the multimodal dataset: Compute embeddings for all image-text pairs using frozen pre-trained encoders

- Process candidate captions: Compute relative representations against the text anchors, keeping only the top-\(k\) similarities and raising them to the power \(p\)

- Process query image: Compute its relative representation against the image anchors with the same processing

- Cross-modal inference: Treat the image's relative representation as if it were its ideal caption's representation, then select the candidate caption with highest similarity

Key hyperparameters:

- \(k\): Number of non-zero entries (typically 800 out of millions)

- \(p\): Exponent for similarity weighting (typically 8)

These create sparse, interpretable representations where each dimension corresponds to similarity with a specific training pair.
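The steps above can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the authors' implementation: the helper names (`normalize_rows`, `sparse_relative`, `asif_classify`), the clamping of negative similarities, the final L2 normalization, and the toy data built from orthogonal class prototypes are all choices made here for the example.

```python
import numpy as np

def normalize_rows(x, eps=1e-12):
    """Scale each row to unit L2 norm (eps guards against all-zero rows)."""
    return x / np.maximum(np.linalg.norm(x, axis=-1, keepdims=True), eps)

def sparse_relative(embs, anchors, k, p):
    """Relative representations of the rows of embs: keep top-k sims, raise to p."""
    k = min(k, anchors.shape[0])
    sims = normalize_rows(embs) @ normalize_rows(anchors).T   # (m, n) cosine sims
    top = np.argpartition(sims, -k, axis=1)[:, -k:]           # indices of the k largest
    rows = np.arange(sims.shape[0])[:, None]
    out = np.zeros_like(sims)
    # Clamping negatives is an assumption of this sketch, not taken from the source.
    out[rows, top] = np.maximum(sims[rows, top], 0.0) ** p
    return normalize_rows(out)

def asif_classify(image_emb, caption_embs, image_anchors, text_anchors, k=800, p=8):
    """Return the index of the best candidate caption and all candidate scores."""
    img_rel = sparse_relative(image_emb[None, :], image_anchors, k, p)  # (1, n)
    cap_rel = sparse_relative(caption_embs, text_anchors, k, p)         # (c, n)
    scores = (cap_rel @ img_rel.T).ravel()
    return int(np.argmax(scores)), scores

# Toy coupled data: 3 classes, 10 anchor pairs per class, different dims per modality.
rng = np.random.default_rng(0)
classes, per_class, d_img, d_txt = 3, 10, 16, 12
labels = np.repeat(np.arange(classes), per_class)
img_protos = np.eye(classes, d_img)
txt_protos = np.eye(classes, d_txt)
image_anchors = img_protos[labels] + 0.01 * rng.normal(size=(len(labels), d_img))
text_anchors = txt_protos[labels] + 0.01 * rng.normal(size=(len(labels), d_txt))

query_image = img_protos[1] + 0.01 * rng.normal(size=d_img)  # an image of class 1
pred, scores = asif_classify(query_image, txt_protos, image_anchors, text_anchors,
                             k=10, p=8)
print(pred, scores)
```

The image and the captions never meet in a shared embedding space; they are compared only through their sparse similarity profiles over the same set of anchor pairs.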

The Similarity Principle

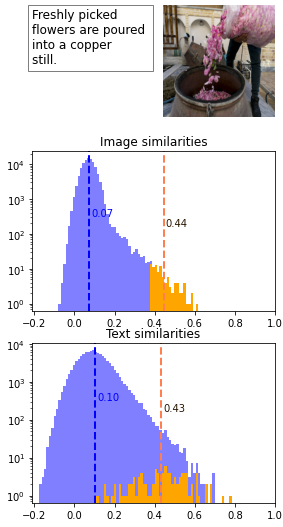

Why does this work? Because captions of similar images are themselves similar.

This natural correlation between visual and textual similarity in good encoders is what enables ASIF’s mode-invariant representations to work without any training.

Experiments

Zero-Shot Classification Performance

We evaluated ASIF against CLIP and LiT on standard benchmarks using only 1.6M image-text pairs from CC12M—up to 250× smaller than prior work:

| Method | Dataset Size | ImageNet | CIFAR100 | Pets | ImageNet-v2 |
|---|---|---|---|---|---|
| CLIP | 400M (private) | 68.6 | 68.7 | 88.9 | - |
| CLIP | 15M (public) | 31.3 | - | - | - |
| LiT | 901M (private) | 70.1 | 70.9 | 88.1 | 61.7 |
| ASIF (supervised) | 1.6M (public) | 60.9 | 50.2 | 81.5 | 52.2 |
| ASIF (unsupervised) | 1.6M (public) | 53.0 | 46.5 | 74.7 | 45.9 |

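With a fraction of the data, ASIF reaches 60.9% on ImageNet, against 68.6% for CLIP trained on 400M private pairs and 31.3% for CLIP trained on 15M public pairs. It also works with an unsupervised vision encoder (DINO), reaching 53.0% on ImageNet.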

Scaling Laws

Performance scales smoothly with dataset size without saturation, even with smaller encoders. This suggests ASIF could benefit from larger multimodal collections while maintaining its training-free approach.

Interpretability

Every ASIF prediction can be traced back to specific training examples. Each dimension in the sparse relative representation corresponds to a unique image-text pair.

This transparency enables:

- Understanding correct and incorrect predictions

- Identifying problematic training data

- Curating better datasets through rapid iteration
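To make the tracing concrete, here is a small sketch that assumes the image-caption score is the dot product of two sparse relative representations. Each anchor's contribution is then the product of the corresponding entries, so the score decomposes over training pairs. The function name `explain_score` and the example vectors are hypothetical.

```python
import numpy as np

def explain_score(img_rel, cap_rel, top=3):
    """Return (anchor_index, contribution) pairs that add most to img_rel . cap_rel."""
    contrib = img_rel * cap_rel                 # per-anchor share of the dot product
    order = np.argsort(contrib)[::-1][:top]     # largest contributions first
    return [(int(i), float(contrib[i])) for i in order if contrib[i] > 0]

# Hypothetical sparse relative representations over 5 anchor pairs.
img_rel = np.array([0.0, 0.8, 0.0, 0.6, 0.0])
cap_rel = np.array([0.0, 0.6, 0.8, 0.0, 0.0])

# Only anchor 1 is active in both vectors, so it alone explains the score.
print(explain_score(img_rel, cap_rel))
```

Looking up the returned indices in the multimodal collection shows which image-text pairs drove a prediction, correct or not.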

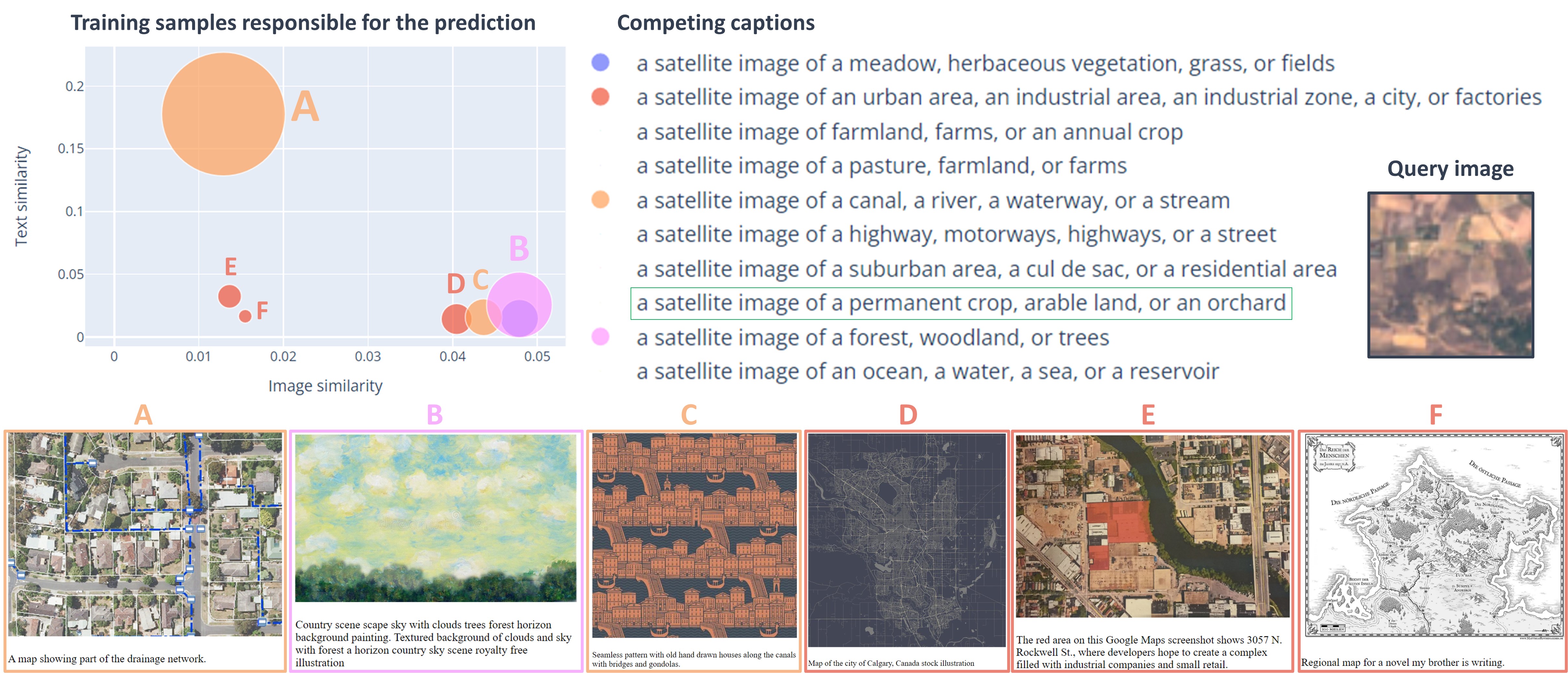

Case Study: EuroSAT Classification

To further demonstrate ASIF’s interpretability, we examine satellite image classification on the EuroSAT dataset. The visualizations below show how ASIF makes predictions by identifying the most similar training samples in both image and text spaces.

These examples highlight the semantic gap between CC12M (general web images) and specialized domains like satellite imagery. Despite this challenge, ASIF’s interpretability makes it easy to diagnose issues and improve performance by adding targeted examples.

Unique Properties

No Training Required

ASIF combines independently pre-trained encoders with multimodal data embeddings, and no neuron is tuned. Deploying or updating a model takes seconds, not days.

Data Efficiency

By leveraging pre-trained encoders, ASIF requires far less multimodal data. We achieve competitive zero-shot performance with 1.6M pairs versus 400M+ for CLIP.

Radically Data-Centric

- Add new concepts: Encode new image-text pairs and immediately use them

- Remove data: Delete embeddings to remove training samples (crucial for data rights compliance)

- Iterate rapidly: Test different multimodal collections without retraining
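The operations in this list amount to adding or deleting rows in two embedding matrices. The sketch below illustrates that idea; `AnchorStore`, its methods, and the pair identifiers are hypothetical names introduced for this example and are not part of any released ASIF code.

```python
import numpy as np

class AnchorStore:
    """Hypothetical container for coupled image/text anchor embeddings."""

    def __init__(self, d_img, d_txt):
        self.image = np.empty((0, d_img))  # one row per anchor image embedding
        self.text = np.empty((0, d_txt))   # matching row per anchor caption embedding
        self.ids = []                      # identifier of each image-text pair

    def add(self, pair_id, image_emb, text_emb):
        """Append a new pair; it is usable by the next query, with no retraining."""
        self.image = np.vstack([self.image, image_emb])
        self.text = np.vstack([self.text, text_emb])
        self.ids.append(pair_id)

    def remove(self, pair_id):
        """Delete a pair's embeddings so it can no longer influence predictions."""
        i = self.ids.index(pair_id)
        self.image = np.delete(self.image, i, axis=0)
        self.text = np.delete(self.text, i, axis=0)
        del self.ids[i]

    def __len__(self):
        return len(self.ids)

store = AnchorStore(d_img=4, d_txt=3)
store.add("pair-a", np.ones(4), np.zeros(3))
store.add("pair-b", np.zeros(4), np.ones(3))
store.remove("pair-a")
print(len(store), store.ids)
```

Because both matrices are indexed by the same row position, removing a pair keeps the image and text sides aligned.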

Built-in Interpretability

Every prediction traces to at most \(k\) training pairs. No expensive attribution methods are needed: interpretability is free by construction.

Fast Model Updates

Example: ASIF initially achieved 29.4% on EuroSAT (satellite images). Adding just 100 EuroSAT image-text pairs boosted accuracy to 82.2%, in seconds rather than hours.

Key Findings

- Training-free alignment works: Frozen unimodal encoders + coupled data embeddings create effective multimodal models

- Data efficiency matters: 1.6M pairs achieve competitive results versus models trained on 250× more data

- Relative representations enable mode-invariance: Similarities to multimodal anchors create naturally aligned spaces

- Interpretability comes free: Sparse representations directly link predictions to training examples

- Memory and retrieval rival learning: ASIF blurs the line between learning algorithms and retrieval systems, raising questions about the role of external memory in machine learning

Relation to Other Approaches

vs. k-Nearest Neighbors: Like k-NN, ASIF is non-parametric and requires explicit training data at test time. Unlike k-NN, ASIF performs open-ended classification with arbitrary new captions, functioning as a drop-in CLIP replacement.

vs. Kernel Methods: The relative representation can be viewed as an explicit feature map in a kernel space. However, ASIF emphasizes explicit coordinates for interpretability rather than operating in an implicit feature space.

vs. Prototypical Networks: ASIF aligns with classical few-shot learning approaches that leverage similarity to prototypes, but extends to the multimodal setting without task-specific training.

Implementation Considerations

Scalability: Our non-optimized implementation is less than 2× slower than CLIP. Techniques like product quantization (for memory) and inverse indexing (for speed) could enable scaling to billions of entries using libraries like FAISS.

Memory vs. Computation Trade-off: ASIF avoids training costs but requires storing all anchor embeddings and computing similarities at inference. This trade-off favors scenarios where:

- Training resources are limited

- Models need frequent updates

- Interpretability is critical

- Multimodal data is curated/evolving
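For a rough sense of the storage side of this trade-off, the arithmetic below estimates the size of uncompressed anchor embeddings. The embedding dimensions (768 for both encoders) and 4-byte floats are assumptions chosen for illustration, since the actual sizes depend on the encoders used; only the 1.6M pair count comes from the text.

```python
def anchor_memory_gb(n_pairs, d_img, d_txt, bytes_per_value=4):
    """Approximate size in GB of dense image and text anchor embeddings."""
    return n_pairs * (d_img + d_txt) * bytes_per_value / 1e9

# 1.6M pairs with hypothetical 768-dimensional float32 embeddings per modality.
full_precision = anchor_memory_gb(1_600_000, 768, 768)
# Same collection if every value were stored in a single byte (assumed quantization).
one_byte = anchor_memory_gb(1_600_000, 768, 768, bytes_per_value=1)
print(round(full_precision, 2), round(one_byte, 2))
```

Under these assumptions the collection occupies roughly 10 GB at full precision, which is why compression becomes relevant as the anchor set grows.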

Broader Impact

ASIF raises fundamental questions about foundation models:

Data Efficiency: If competitive performance is achievable with 250× less data and no training, how much of large-scale training is essential versus dataset quality?

Learning vs. Retrieval: ASIF satisfies the definition of a learning algorithm (performance improves with more data) yet stores information in an external memory rather than learned parameters. This challenges traditional views of machine learning.

Perception vs. Interpretation: ASIF separates perception (unimodal encoding) from interpretation (relative representation construction). The encoders act as sensors; meaning attribution happens through the multimodal dataset, not neural weights.

Data Rights and Forgetting: Unlike trained models requiring sophisticated unlearning techniques, ASIF enables trivial data removal—simply delete the corresponding embeddings.

Limitations

- Performance still below CLIP/LiT when abundant multimodal data and training resources are available

- Large memory footprint for the anchor embeddings (though compressible via quantization)

- High-dimensional sparse representations may be less suitable for tasks like text-to-image generation

- Limited evaluation on the full spectrum of multimodal tasks (focused on zero-shot classification)

Citation

@inproceedings{norelli2023asif,
  title = {ASIF: Coupled Data Turns Unimodal Models to Multimodal Without Training},
  author = {Norelli, Antonio and Fumero, Marco and Maiorca, Valentino and Moschella, Luca and Rodol\`{a}, Emanuele and Locatello, Francesco},
  booktitle = {Thirty-seventh Conference on Neural Information Processing Systems},
  year = {2023},
  url = {https://arxiv.org/abs/2210.01738}
}

Authors

Antonio Norelli¹ · Marco Fumero¹ · Valentino Maiorca¹ · Luca Moschella¹ · Emanuele Rodol๠· Francesco Locatello²

¹Sapienza Università di Roma, Dipartimento di Informatica · ²Institute of Science and Technology Austria (ISTA)

Correspondence: Antonio Norelli (norelli@di.uniroma1.it)